Citation: Qi Yang, Lei Fan, Ran Li, et al., “Research on the registration and fusion algorithm of terahertz SAR images,” Chinese Journal of Electronics, vol. x, no. x, pp. 1–10, xxxx. DOI: 10.23919/cje.2024.00.272

The processing of terahertz (THZ) synthetic aperture radar (SAR) images has significant application value in target characteristic analysis and intelligent recognition, and has therefore become an active research topic. This article investigates image registration methods based on the SIFT, SURF, and KAZE operators. To address the research gap in registering high-noise, low-contrast terahertz images, an enhanced RANSAC algorithm incorporating forward preprocessing is developed to register and fuse terahertz SAR images. The results suggest that the KAZE operator is better suited to terahertz SAR image registration than SIFT and SURF. Fusion experiments on several terahertz images further show that image fusion can increase information content and reduce noise. This research supports the application of terahertz SAR images in fields such as target characteristic analysis and recognition.

Terahertz (THZ) waves have been widely investigated because, compared with optical waves, they offer better transmission characteristics and stronger immunity to interference, and they are less affected by solar radiation, thermal radiation, and the particle distribution of the time-varying atmosphere. Compared with microwave radar, terahertz radar offers a wider bandwidth, higher image resolution, and greater sensitivity to Doppler shift. It is therefore a significant emerging technique for target detection, tracking, and identification, with extensive application prospects in both military and civilian sectors. Recently, research areas such as image processing based on terahertz SAR imaging and target recognition have attracted considerable attention. However, owing to the relatively long wavelength of terahertz waves compared with optical waves, and to limitations in hardware and software capabilities, images generated by terahertz imaging systems suffer from high noise levels, low contrast, and substantial loss of edge information. Consequently, improving the quality of terahertz SAR images has become a prominent research focus.

The quality of terahertz radar SAR images can be enhanced through two approaches: image processing and signal processing [1]-[3]. Enhancement through signal processing requires access to the echo parameters, which are costly to store and computationally complex to process, demanding significant manpower and resources. As a result, publicly available SAR images are commonly used instead, which makes it important to investigate quality enhancement methods based on advanced image processing techniques [4]-[6]. Image quality can be enhanced through techniques such as super-resolution reconstruction or image fusion [7]-[9]. Because terahertz SAR images have high noise levels and a low signal-to-noise ratio, and are difficult to acquire, only a limited number of researchers have explored methods for enhancing their quality. Given these characteristics, image fusion is particularly suitable, since it preserves complementary and redundant information from multiple sources, thereby improving image quality while reducing noise [10]-[12]. Image registration is an essential prerequisite for image fusion [13]-[15]. Current registration methods primarily use the SIFT, SURF, and KAZE operators to extract feature points from different images [16]-[18]. The Random Sample Consensus (RANSAC) algorithm is then employed to filter the matched feature points; however, this approach often fails on high-noise images [19], [20]. This article therefore introduces an improved RANSAC algorithm that incorporates a forward preprocessing step for image registration and fusion.

The present study proposes an enhanced RANSAC algorithm with a forward preprocessing approach, based on SIFT, SURF, and KAZE operators, to achieve image registration and fusion in order to enhance the quality of terahertz SAR images. This research provides valuable support for target characterization analysis and recognition in terahertz SAR imagery.

The presence of significant noise and the absence of texture information in terahertz image sequences impose limitations on their application in target feature extraction and intelligent recognition. Additionally, due to antenna aperture constraints, only a small central portion of the image contains valid information. Therefore, it is imperative to investigate techniques for terahertz image registration and fusion to enhance practicality while reducing image noise.

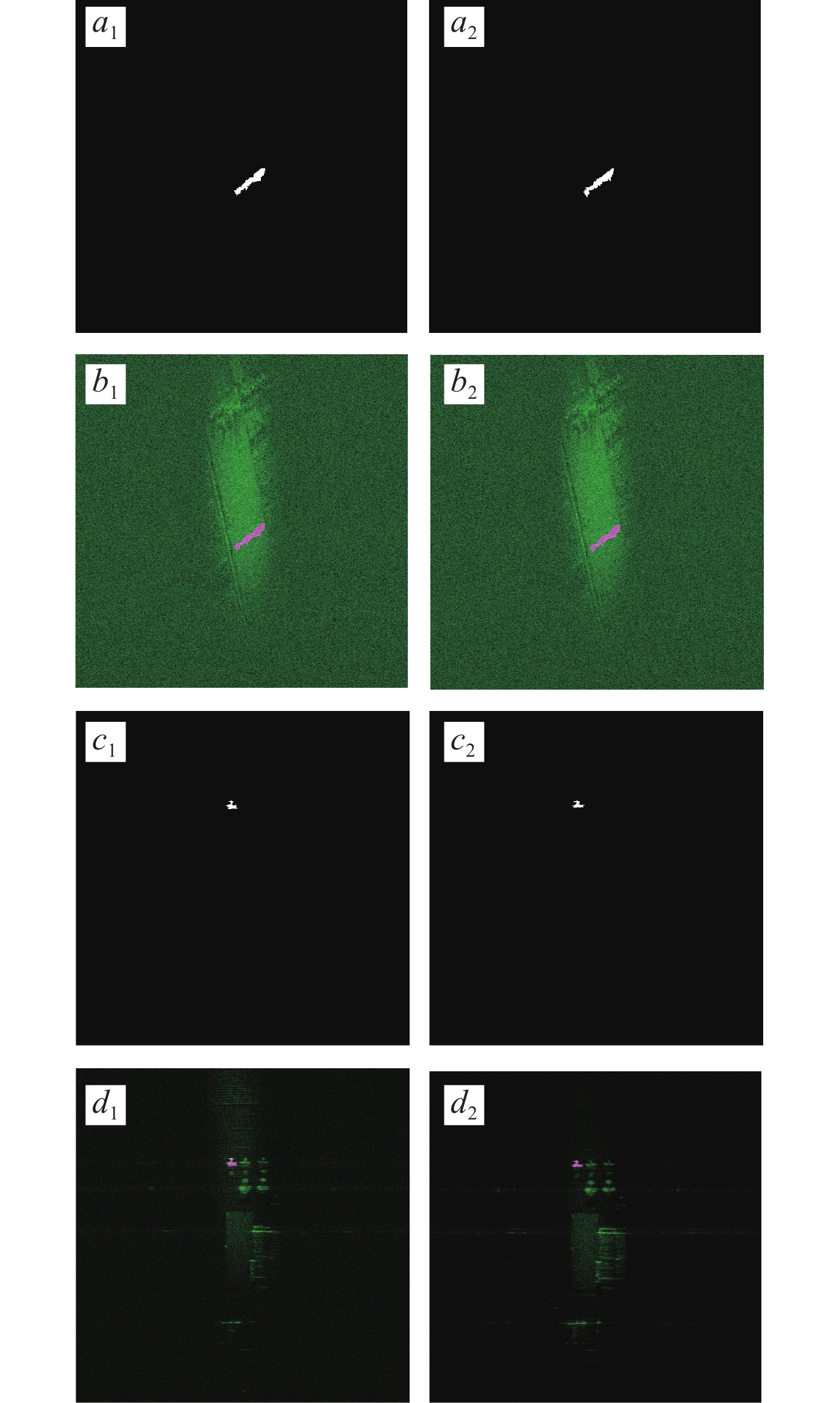

The THZ image shown in Figure 1 has a low signal-to-noise ratio and, because of the antenna aperture constraint, only a small central section of the image contains valid information. Both factors make image registration and fusion challenging.

Image registration plays a pivotal role in enhancing image quality and facilitating image fusion, serving as the fundamental basis for this process. This study explores a corner point matching-based algorithm for image registration.

The invariance of feature keypoints under different scales and rotations is a crucial property. Feature point detection algorithms aim to capture the stable characteristics of an image by identifying local extrema points, which are subsequently utilized for feature matching across various images [21]. This study investigates the extraction and registration of typical feature points such as SIFT, SURF, and KAZE.

The SIFT algorithm is a feature extraction technique based on scale space. It filters the original image with Gaussian kernels at multiple scales to extract keypoints, their scales and orientations, and their feature vectors (descriptors), which can then be used for image registration.

The scale space of an image I(x,y) at different scales can be represented by convolving the image with a Gaussian kernel:

$$
L(x,y,\sigma) = G(x,y,\sigma) * I(x,y) \tag{1}
$$

$$
G(x,y,\sigma) = \frac{1}{2\pi\sigma^2}\, e^{-\frac{x^2+y^2}{2\sigma^2}} \tag{2}
$$

where G(x,y,σ) is a scale-variable Gaussian function, σ is the scale-space factor, and (x,y) is the pixel position in the image.
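Equations (1) and (2) can be illustrated with a minimal NumPy sketch. It samples the Gaussian of Eq. (2) on a finite grid (truncated at ±3σ and renormalized, an assumption needed for discretization) and applies it separably with edge replication at the image borders. The function names, the base scale σ0 = 1.6, and the ratio k = √2 are illustrative choices, not values given in this article.

```python
import numpy as np

def gaussian_kernel_2d(sigma, radius=None):
    """Sample Eq. (2) on a (2r+1)x(2r+1) grid and renormalize so the weights sum to 1."""
    if radius is None:
        radius = int(np.ceil(3 * sigma))  # +-3 sigma keeps >99% of the mass
    ax = np.arange(-radius, radius + 1)
    x, y = np.meshgrid(ax, ax)
    g = np.exp(-(x**2 + y**2) / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    return g / g.sum()

def gaussian_blur(img, sigma):
    """L = G * I (Eq. (1)), done as two 1-D convolutions with edge replication."""
    k1 = gaussian_kernel_2d(sigma).sum(axis=0)  # marginal of the 2-D kernel = 1-D Gaussian
    r = len(k1) // 2
    padded = np.pad(img.astype(float), r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k1, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k1, mode="valid"), 0, rows)

def gaussian_scale_space(img, sigma0=1.6, k=2 ** 0.5, levels=5):
    """Return [L(x, y, sigma0 * k**s) for s = 0 .. levels-1] (hypothetical defaults)."""
    return [gaussian_blur(img, sigma0 * k**s) for s in range(levels)]
```

Separability is used because the 2-D Gaussian factors into two 1-D Gaussians, so filtering rows and then columns gives the same result as the 2-D convolution at a lower cost.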

To detect features that are stable across scales, adjacent (upper and lower) layers of the scale space are subtracted, yielding a difference-of-Gaussian (DoG) pyramid that effectively captures the contour features of the target:

$$
D(x,y,\sigma) = L(x,y,k\sigma) - L(x,y,\sigma) \tag{3}
$$

where the constant factor k is the ratio between the scales of two consecutive levels.
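The DoG construction of Eq. (3) and the 26-neighbor extremum test described in the next paragraph can be sketched as follows. The input is assumed to be a list of equally sized Gaussian-blurred images ordered by increasing scale; the contrast and edge-response filtering that SIFT applies afterwards is deliberately omitted, and all function names are hypothetical.

```python
import numpy as np

def difference_of_gaussians(scale_space):
    """Eq. (3): subtract each blurred level from the next (coarser) one."""
    return [b - a for a, b in zip(scale_space[:-1], scale_space[1:])]

def is_extremum(dog, s, y, x):
    """True if dog[s][y, x] is strictly above or strictly below all 26 neighbors:
    8 in its own layer plus 9 in each of the layers above and below."""
    cube = np.stack([dog[s - 1][y - 1:y + 2, x - 1:x + 2],
                     dog[s][y - 1:y + 2, x - 1:x + 2],
                     dog[s + 1][y - 1:y + 2, x - 1:x + 2]])
    centre = cube[1, 1, 1]
    others = np.delete(cube.ravel(), 13)  # index 13 is the centre of the 3x3x3 cube
    return bool(np.all(centre > others) or np.all(centre < others))

def dog_extrema(dog):
    """Scan interior pixels of the interior DoG layers; return (layer, y, x) candidates."""
    points = []
    for s in range(1, len(dog) - 1):
        h, w = dog[s].shape
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                if is_extremum(dog, s, y, x):
                    points.append((s, y, x))
    return points
```

The explicit loops keep the 26-neighbor rule readable; a practical implementation would vectorize the comparison.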

The location and scale of each keypoint are obtained from local extrema in the DoG scale space: each pixel is compared with its 8 neighbors in the same layer and with the 18 pixels (9 each) in the layers above and below. Because some candidates may have weak contrast or a strong edge response, the unstable points from this initial search are eliminated using methods such as curve fitting. The orientation of each remaining feature point is then determined from image gradients: the gradient magnitude m and angle θ around each feature point are computed and analyzed statistically:

$$
m(x,y) = \sqrt{\big(L(x+1,y)-L(x-1,y)\big)^2 + \big(L(x,y+1)-L(x,y-1)\big)^2} \tag{4}
$$

$$
\theta(x,y) = \arctan\left[\frac{L(x,y+1)-L(x,y-1)}{L(x+1,y)-L(x-1,y)}\right] \tag{5}
$$
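Equations (4) and (5) translate directly into central differences on a blurred image L. This sketch assumes x indexes columns and y indexes rows, leaves border pixels at zero, and uses `np.arctan2` instead of a plain arctangent so that the angle covers the full (−π, π] range; the Gaussian weighting window that SIFT applies around each keypoint is omitted. Function names are hypothetical.

```python
import numpy as np

def gradient_mag_ori(L):
    """Eqs. (4)-(5) with central differences; border pixels stay zero."""
    L = L.astype(float)
    dx = np.zeros_like(L)
    dy = np.zeros_like(L)
    dx[:, 1:-1] = L[:, 2:] - L[:, :-2]  # L(x+1, y) - L(x-1, y)
    dy[1:-1, :] = L[2:, :] - L[:-2, :]  # L(x, y+1) - L(x, y-1)
    m = np.sqrt(dx**2 + dy**2)
    theta = np.arctan2(dy, dx)          # quadrant-aware version of Eq. (5)
    return m, theta

def orientation_histogram(m, theta, bins=36):
    """Magnitude-weighted orientation histogram; its peak indicates the dominant direction."""
    hist, edges = np.histogram(theta, bins=bins, range=(-np.pi, np.pi), weights=m)
    return hist, edges
```

With 36 bins each bin spans 10°, and the bin with the largest accumulated magnitude is taken as the keypoint's dominant orientation.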

The SURF algorithm is an enhanced version of the SIFT algorithm that uses the Hessian matrix to detect extrema and extract local features. By approximating the Gaussian templates with simpler filters, it speeds up feature extraction and matching while improving robustness.

For an image I and a pixel X = (x,y), the Hessian matrix at scale σ is defined as:

$$
H(X,\sigma) = \begin{pmatrix} L_{xx}(X,\sigma) & L_{xy}(X,\sigma) \\ L_{xy}(X,\sigma) & L_{yy}(X,\sigma) \end{pmatrix} \tag{6}
$$

where Lxx(X,σ), Lxy(X,σ), and Lyy(X,σ) are the convolutions of image I at point (x,y) with the second-order Gaussian derivatives ∂²g(σ)/∂x², ∂²g(σ)/∂x∂y, and ∂²g(σ)/∂y², respectively, and g(σ) is the probability density function of the two-dimensional Gaussian distribution:

$$
g(\sigma) = \frac{1}{2\pi\sigma^2}\, e^{-\frac{x^2+y^2}{2\sigma^2}} \tag{7}
$$

The SURF algorithm constructs its scale space with box filters of varying sizes. To compensate for the approximation error introduced by the box filters, Lxy is multiplied by a weighting factor of 0.9, giving:

$$
\det(H) = L_{xx} L_{yy} - \left(0.9\, L_{xy}\right)^2 \tag{8}
$$

After the scale space is established, candidate extrema are obtained from the approximated Hessian determinant. Each candidate is compared with its 26 neighbors in the current and adjacent scales, and non-maximum suppression yields a set of feature points. Finally, a Taylor expansion of the Hessian response is used to eliminate low-contrast feature points from the set.
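SURF's speed comes from evaluating its box filters on an integral image, where the sum over any rectangle costs four look-ups regardless of the box size. The sketch below shows that mechanism together with the weighted determinant of Eq. (8). Building the actual Dxx, Dyy, and Dxy box-filter layouts at each filter size is left out, and the helper names are hypothetical.

```python
import numpy as np

def integral_image(img):
    """S[y, x] = sum of img[:y, :x]; a leading row and column of zeros simplify look-ups."""
    S = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    S[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return S

def box_sum(S, y0, x0, y1, x1):
    """Sum of img[y0:y1, x0:x1] from four look-ups, independent of the box size."""
    return S[y1, x1] - S[y0, x1] - S[y1, x0] + S[y0, x0]

def det_hessian_approx(Dxx, Dyy, Dxy, w=0.9):
    """Eq. (8): determinant of the box-filter Hessian with the 0.9 weight on Dxy."""
    return Dxx * Dyy - (w * Dxy) ** 2
```

Each box-filter response is a weighted combination of a few `box_sum` calls, which is why enlarging the filter instead of downsampling the image costs nothing extra.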

The KAZE algorithm is an adaptive feature extraction technique based on a nonlinear scale space that exhibits invariance to variations such as rotation, scaling, and illumination. It can effectively extract a larger number of feature points to enhance the registration effect.

The KAZE algorithm constructs a pyramid-shaped nonlinear scale space using the variable conductance diffusion method and the additive operator splitting algorithm (AOS), similar to building a Gaussian pyramid with SIFT.

Because nonlinear diffusion filtering is formulated in terms of evolution time, the scale parameter σi must be converted into time units:

$$
t_i = \frac{1}{2}\sigma_i^2, \quad i = 0, \ldots, N \tag{9}
$$

The total number of layers in the pyramid is represented by N.

The nonlinear scale space of image I can be obtained after applying the AOS algorithm:

$$
L^{i+1} = \left[ I - (t_{i+1} - t_i) \sum_{l=1}^{m} A_l(L^i) \right]^{-1} L^i \tag{10}
$$

Here Li denotes the diffused image at level i of the nonlinear scale space (starting from the Gaussian-filtered input image), i ∈ [0, N−1], t denotes the evolution time, I in this equation denotes the identity matrix, and Al(Li) is the conductivity matrix along dimension l of the m image dimensions.
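One evolution step of Eq. (10) can be approximated with the AOS scheme, which replaces the full 2-D inverse by the average of m = 2 one-dimensional semi-implicit solves (one along rows, one along columns), each a tridiagonal system. The following NumPy sketch assumes a Perona-Malik conductance g = 1/(1 + |∇L|²/k²) with a user-supplied contrast factor k, computes it from the unsmoothed image for brevity, and uses no-flux boundaries; these are illustrative assumptions rather than this article's exact settings, and all function names are hypothetical.

```python
import numpy as np

def sigma_to_time(sigma):
    """Eq. (9): evolution time t_i = sigma_i^2 / 2."""
    return 0.5 * sigma ** 2

def pm_g2(L, k):
    """Assumed Perona-Malik conductance g = 1 / (1 + |grad L|^2 / k^2)."""
    gy, gx = np.gradient(L)
    return 1.0 / (1.0 + (gx ** 2 + gy ** 2) / k ** 2)

def thomas(lower, diag, upper, rhs):
    """Thomas algorithm; each row of the (rows, n) arrays is one tridiagonal system."""
    n = diag.shape[1]
    c = np.zeros_like(diag)
    d = np.zeros_like(diag)
    c[:, 0] = upper[:, 0] / diag[:, 0]
    d[:, 0] = rhs[:, 0] / diag[:, 0]
    for j in range(1, n):
        denom = diag[:, j] - lower[:, j] * c[:, j - 1]
        c[:, j] = upper[:, j] / denom
        d[:, j] = (rhs[:, j] - lower[:, j] * d[:, j - 1]) / denom
    x = np.zeros_like(diag)
    x[:, -1] = d[:, -1]
    for j in range(n - 2, -1, -1):
        x[:, j] = d[:, j] - c[:, j] * x[:, j + 1]
    return x

def implicit_rows(L, g, tau):
    """Solve (I - tau * A_l) x = L along every row with no-flux boundaries."""
    rows, n = L.shape
    w = 0.5 * (g[:, :-1] + g[:, 1:])  # conductance between horizontal neighbors
    lower = np.zeros((rows, n))
    upper = np.zeros((rows, n))
    diag = np.ones((rows, n))
    lower[:, 1:] = -tau * w
    upper[:, :-1] = -tau * w
    diag[:, 1:] += tau * w
    diag[:, :-1] += tau * w
    return thomas(lower, diag, upper, L.astype(float))

def aos_step(L, g, dt):
    """AOS: L_new = (1/m) * sum_l (I - m*dt*A_l)^(-1) L with m = 2 dimensions."""
    m = 2
    along_rows = implicit_rows(L, g, m * dt)
    along_cols = implicit_rows(L.T, g.T, m * dt).T
    return (along_rows + along_cols) / m
```

Each 1-D system is strictly diagonally dominant, so the Thomas solve is stable for any step size, and the symmetric, zero-row-sum structure of Al means the total image intensity is preserved.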

After the nonlinear scale space is constructed, each point is compared with the grayscale values in its 3×3 neighborhood and with the corresponding neighborhoods in the layers above and below, and local maxima of the Hessian response are selected as feature points. The Hessian response is computed as:

$$
L_{\mathrm{Hessian}} = \sigma^2 \left( L_{xx} L_{yy} - L_{xy}^2 \right) \tag{11}
$$

After the feature points are detected, their precise (sub-pixel) locations are determined through a Taylor expansion.
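Eq. (11) and the Taylor-expansion refinement can be sketched together. Derivatives are approximated here with central finite differences via `np.gradient`, a simplification of the derivative filters an actual detector would use. The refinement fits a second-order Taylor expansion to the 3×3 response patch around a detected point and moves to the fitted extremum, offset = −H⁻¹∇R, in the two spatial coordinates only (refinement along scale is omitted). Function names are hypothetical.

```python
import numpy as np

def hessian_response(L, sigma):
    """Eq. (11): sigma^2 * (Lxx * Lyy - Lxy^2) with central finite differences."""
    Ly, Lx = np.gradient(L.astype(float))  # np.gradient returns d/d(row), d/d(col)
    Lxy, Lxx = np.gradient(Lx)
    Lyy, _ = np.gradient(Ly)
    return sigma ** 2 * (Lxx * Lyy - Lxy ** 2)

def subpixel_offset(patch):
    """Second-order Taylor fit to a 3x3 response patch centred on a detected point.

    Returns the (dy, dx) offset of the fitted extremum, -H^{-1} * gradient."""
    p = np.asarray(patch, dtype=float)
    g = np.array([(p[2, 1] - p[0, 1]) / 2, (p[1, 2] - p[1, 0]) / 2])
    hyy = p[2, 1] - 2 * p[1, 1] + p[0, 1]
    hxx = p[1, 2] - 2 * p[1, 1] + p[1, 0]
    hxy = (p[2, 2] - p[2, 0] - p[0, 2] + p[0, 0]) / 4
    H = np.array([[hyy, hxy], [hxy, hxx]])
    return -np.linalg.solve(H, g)
```

For a response that is exactly quadratic around the point, the finite differences are exact and the offset recovers the true extremum; in practice, points whose offset exceeds half a pixel are usually re-examined at the neighboring pixel.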

The THZ images collected in this article exhibit significant noise and lack texture information. The matched feature points obtained through coarse registration are typically disordered, and the correctly matched pairs are sparse, far fewer than the incorrect matches, which makes image registration exceptionally challenging. Therefore, exploiting the fact that the aircraft trajectory varies little between frames, this article proposes an enhanced RANSAC algorithm that preprocesses the matching point pairs using slope consistency.

Step 1. Because no abrupt changes in the aircraft's direction were observed, the image deflection angle between THZ frames is assumed to lie within ±10°, giving an angular range of θ = 20°.

Step 2. Sort the direction angles of the matching point pairs and classify them by slope consistency. Starting from the initial value n = 1, divide the angles into intervals. If an interval contains only a few points, go to Step 4; otherwise, go to Step 3.

Step 3. Apply the RANSAC algorithm to eliminate outliers among the matching points and compute the positional error of the matches. Then return to Step 2 and progressively narrow the range of direction angles until the computed error E falls below a specified threshold:

$$
E = \left| B_i - A_i \cdot \mathrm{TM} \right|^2, \quad i = 1, 2, 3, 4, \ldots \tag{12}
$$

The matching point in the second frame image is denoted as Bi, while Ai represents the matching point in the first frame image. The registration matrix TM is obtained through least squares using Ai and Bi.

Step 4. Successively reduce the coarse tentative matching pairs following the RANSAC principle until the calculation error E is minimized.
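The four steps above leave several details open, such as the interval width, the transformation model, and the thresholds. The sketch below is one possible reading, not the authors' implementation. It assumes that the slope of a match is the direction of its displacement vector Bi − Ai, keeps the densest angular window of width θ/n, fits a similarity transform (chosen because it can be estimated from as few as two point pairs), and measures E as the mean of Eq. (12) over the retained pairs. All function names, tolerances, and the window rule are hypothetical.

```python
import numpy as np

def fit_similarity(A, B):
    """Least-squares TM (3x2) with [x, y, 1] @ TM ~ [x', y'] for a similarity transform."""
    n = len(A)
    M = np.zeros((2 * n, 4))
    M[0::2] = np.column_stack([A[:, 0], -A[:, 1], np.ones(n), np.zeros(n)])
    M[1::2] = np.column_stack([A[:, 1], A[:, 0], np.zeros(n), np.ones(n)])
    a, b, tx, ty = np.linalg.lstsq(M, B.reshape(-1), rcond=None)[0]
    return np.array([[a, b], [-b, a], [tx, ty]])

def apply_tm(A, TM):
    return np.column_stack([A, np.ones(len(A))]) @ TM

def reg_error(A, B, TM):
    """Eq. (12) averaged over the pairs: mean of |B_i - A_i TM|^2."""
    return float(np.mean(np.sum((B - apply_tm(A, TM)) ** 2, axis=1)))

def slope_filter(A, B, width_deg):
    """Assumed Step 2: keep pairs whose displacement angle lies in the densest window
    of the given width. Wrap-around at +-180 degrees is ignored for brevity."""
    d = B - A
    ang = np.degrees(np.arctan2(d[:, 1], d[:, 0]))
    counts = [(np.abs(ang - a) <= width_deg / 2).sum() for a in ang]
    centre = ang[int(np.argmax(counts))]
    return np.abs(ang - centre) <= width_deg / 2

def ransac_similarity(A, B, tol=2.0, iters=200, seed=0):
    """Step 3: RANSAC on 2-point samples, then a least-squares refit on the inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(A), dtype=bool)
    for _ in range(iters):
        i, j = rng.choice(len(A), size=2, replace=False)
        if np.allclose(A[i], A[j]):
            continue
        TM = fit_similarity(A[[i, j]], B[[i, j]])
        inliers = np.linalg.norm(B - apply_tm(A, TM), axis=1) < tol
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 2:
        return None, best
    return fit_similarity(A[best], B[best]), best

def improved_ransac(A, B, theta=20.0, e_thresh=1.0, min_pts=3, max_n=8):
    """Narrow the window theta/n (n = 1, 2, ...) until E < e_thresh."""
    TM, keep = None, np.array([], dtype=int)
    for n in range(1, max_n + 1):
        mask = slope_filter(A, B, theta / n)
        if mask.sum() < min_pts:  # too few points left: keep the last model (one reading of Step 4)
            break
        TM_n, inl = ransac_similarity(A[mask], B[mask])
        if TM_n is None:
            break
        TM, keep = TM_n, np.flatnonzero(mask)[inl]
        if reg_error(A[keep], B[keep], TM) < e_thresh:
            break
    return TM, keep
```

The slope filter matters because plain RANSAC needs a reasonable inlier ratio: restricting the candidates to one consistent direction first raises that ratio before any model is fitted.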

The quality of image registration in different frames is assessed by employing a fixed reference object within the same scene.

A clustering algorithm is used to extract stationary objects from the different frames and to generate masks, as shown in Figure 3. The degree of overlap between these masks serves as a metric for assessing registration quality and for comparing the accuracy of different registration methods.

Registration accuracy is quantified by the mask overlap rate P, defined as:

$$
P = \frac{S_A \cap S_B}{S_A + S_B - S_A \cap S_B} \tag{13}
$$

The areas SA and SB represent the respective coverage of distinct frame image masks, while SA∩SB denotes their overlapping region.
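Eq. (13) is the intersection-over-union (Jaccard index) of the two masks. A minimal sketch, assuming both masks are boolean arrays on the same pixel grid (the second-frame mask already warped by TM); the function name is hypothetical.

```python
import numpy as np

def mask_overlap_rate(mask_a, mask_b):
    """Eq. (13): |S_A ∩ S_B| / (|S_A| + |S_B| - |S_A ∩ S_B|) for boolean masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    inter = np.logical_and(a, b).sum()
    union = a.sum() + b.sum() - inter
    return float(inter / union) if union else 0.0  # two empty masks: report 0
```

Two 2×2 squares offset by one column share 2 of their 6 covered pixels, so P = 1/3; identical masks give P = 1.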

Image fusion serves as a crucial technique for enhancing image quality, as it effectively mitigates image noise while simultaneously incorporating valuable information into the images.

The present article employs a weighted image fusion method to effectively eliminate stitching gaps by utilizing the average grayscale value of overlapping region pixels for seamless image integration.

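$$
F(x,y) = \begin{cases} F_1(x,y), & (x,y) \in F_1 \setminus F_2 \\ 0.5\,F_1(x,y) + 0.5\,F_2(x,y), & (x,y) \in F_1 \cap F_2 \\ F_2(x,y), & (x,y) \in F_2 \setminus F_1 \end{cases} \tag{14}
$$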

where F1 and F2 are the grayscale values of the two registered frames, F is the grayscale value of the fused image, and (x, y) is the pixel location.
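A minimal sketch of the weighted fusion in Eq. (14), assuming F2 has already been warped into the coordinate frame of F1 and that boolean arrays `valid1` and `valid2` (hypothetical names) mark where each frame contains data.

```python
import numpy as np

def weighted_fusion(F1, F2, valid1, valid2):
    """Eq. (14): F1 where only frame 1 is valid, F2 where only frame 2 is valid,
    and the 0.5/0.5 average in the overlap; pixels covered by neither stay 0."""
    F = np.zeros(F1.shape, dtype=float)
    only1 = valid1 & ~valid2
    only2 = valid2 & ~valid1
    both = valid1 & valid2
    F[only1] = F1[only1]
    F[only2] = F2[only2]
    F[both] = 0.5 * F1[both] + 0.5 * F2[both]
    return F
```

If the noise in the two frames is independent and of equal variance, the 0.5/0.5 average halves the noise variance in the overlap, which is consistent with the noise reduction the fused images show.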

This article utilizes the grayscale normalized standard deviation of the overlapping region mask in fused images as a metric for quantifying the denoising effect. Furthermore, image entropy is employed to assess the level of information richness present in these fused images.

The normalized standard deviation D of the gray level in the overlapping region of the mask can be expressed as follows:

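$$
D = \frac{\frac{1}{N}\sqrt{\sum \left( F_i(x,y)\cdot \mathrm{Mask} - \sum F_i(x,y)\cdot \mathrm{Mask}/N \right)^2}}{\sum F_i(x,y)\cdot \mathrm{Mask}/N}, \quad (x,y) \in \mathrm{Mask} \tag{15}
$$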

The variable Mask represents the intersection area between the mask of the first frame image and the registered mask of the second frame. N denotes the total number of pixels within the mask, while Fi indicates the grayscale value of the image.

The surface of the fixed reference object in the image is assumed to have a uniform dielectric constant, so its true grayscale value is constant and is represented by the average grayscale. A smaller standard deviation therefore indicates reduced image noise.
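A minimal sketch of D, reading Eq. (15) with the 1/N factor outside the square root. This differs from the usual coefficient of variation by a factor of 1/√N, which does not change comparisons between images evaluated on the same mask. The function name is hypothetical, and the mask is assumed to be a boolean array aligned with F.

```python
import numpy as np

def normalized_std(F, mask):
    """Eq. (15): (1/N) * sqrt(sum (F - mean)^2) / mean over the N pixels inside the mask."""
    vals = F[np.asarray(mask, dtype=bool)].astype(float)
    N = vals.size
    mean = vals.sum() / N
    return float(np.sqrt(np.sum((vals - mean) ** 2)) / N / mean)
```

For mask values 1 and 3 the mean is 2 and the root of the squared deviations is √2, so D = √2 / (2 × 2) ≈ 0.354.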

The image entropy EN is commonly used to quantify the information content of an image. A higher entropy indicates that the image retains more information, which generally corresponds to richer detail.

$$
EN = -\sum_{a} P_A(a) \log P_A(a) \tag{16}
$$

where a is a grayscale value of the image and PA(a) is the probability of grayscale value a, i.e., the normalized grayscale histogram.
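A minimal sketch of Eq. (16), assuming 8-bit integer grayscale images and a base-2 logarithm (so EN is in bits); the article does not state the log base, and the function name is hypothetical.

```python
import numpy as np

def image_entropy(img, levels=256):
    """Eq. (16) with P_A(a) taken from the normalized grayscale histogram."""
    hist = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = hist / hist.sum()
    p = p[p > 0]  # terms with P_A(a) = 0 contribute nothing (0 * log 0 := 0)
    return float(-np.sum(p * np.log2(p)))
```

A constant image has EN = 0, an image split evenly between two gray levels has EN = 1 bit, and an image using all 256 levels equally often reaches the maximum of 8 bits.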

The SIFT, SURF, and KAZE algorithms were used in this article for image registration.

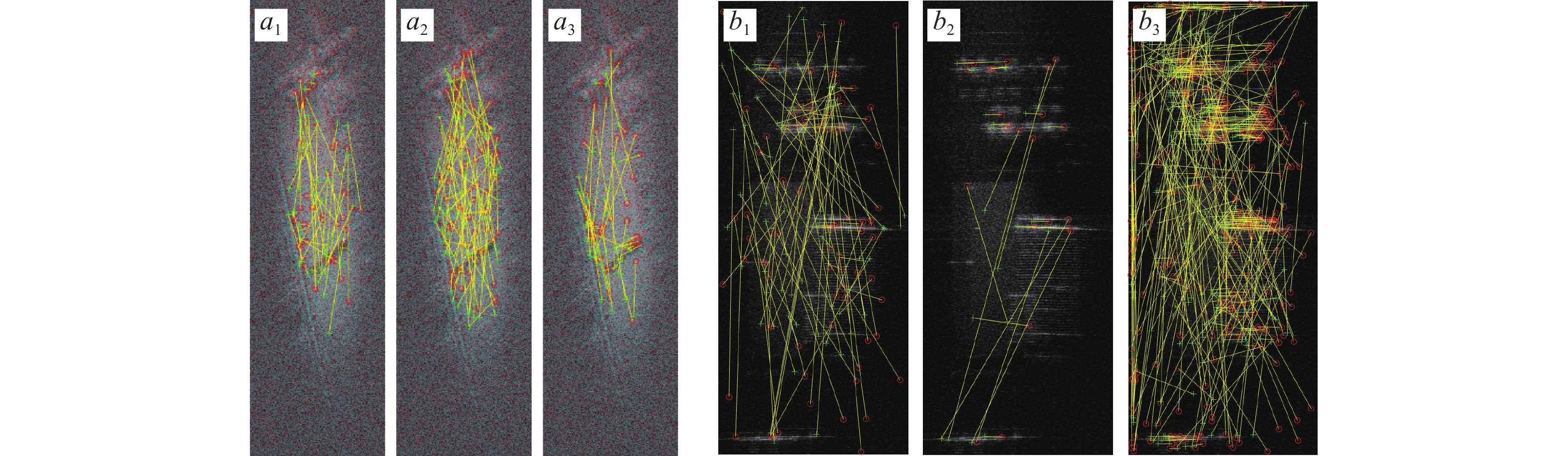

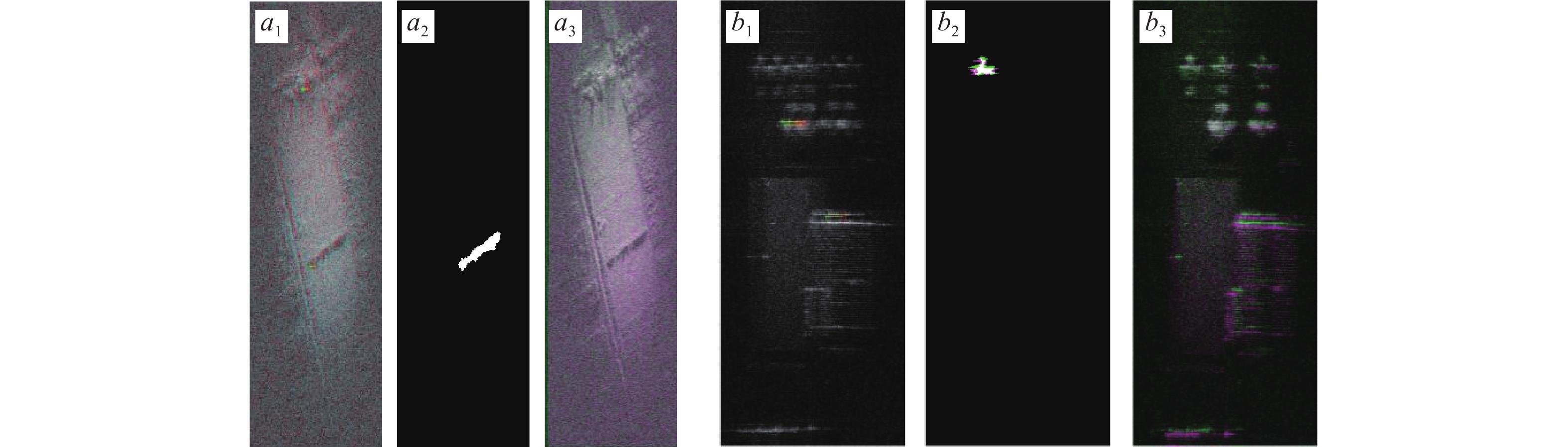

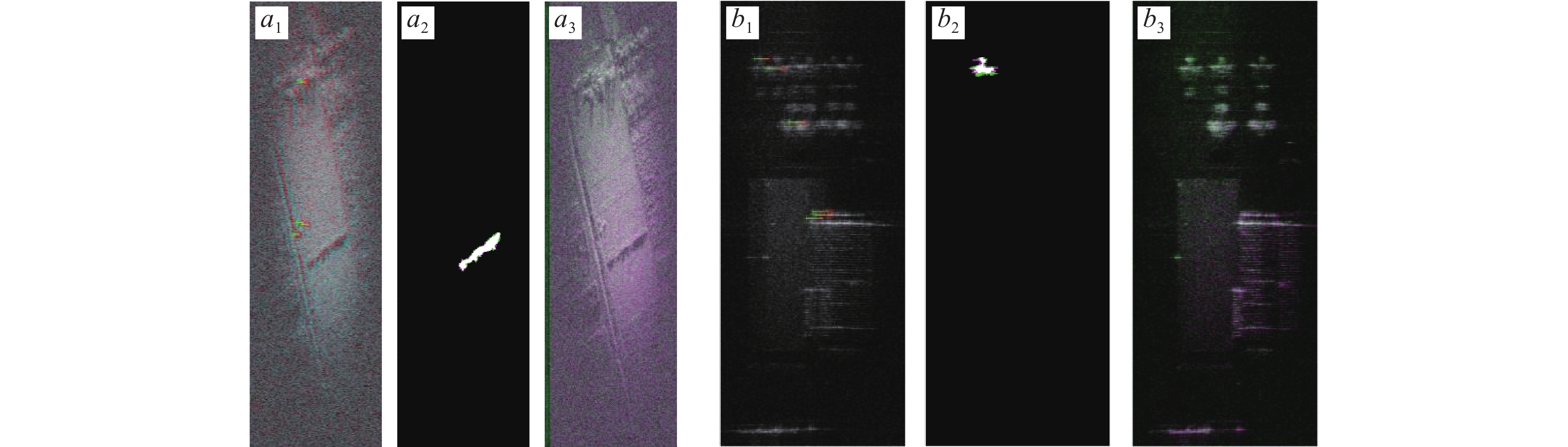

The THZ images exhibit uneven, strongly fluctuating noise and, as Figure 4 shows, lack texture features, so the directions of the corner point matches are irregularly distributed, which makes image matching difficult. Figure 5 shows the coarse registration results obtained with the SIFT, SURF, and KAZE operators for corner point extraction; only a few pairs of corner points are correctly matched. Algorithms such as BRISK, MSER, and ORB fail even to achieve coarse registration because they cannot extract usable corner points. As Figure 6 illustrates, directly applying the RANSAC algorithm to remove inconsistent matches from the coarse SIFT, SURF, and KAZE correspondences does not yield accurate registration, because incorrect matches far outnumber correct ones. These data lack consistency and cannot be used directly for precise alignment with RANSAC, so the feature point matches must first be preprocessed and filtered. Because the edge signal information in the image is insufficiently clear, the following figures display cropped versions of the original images for better visualization.

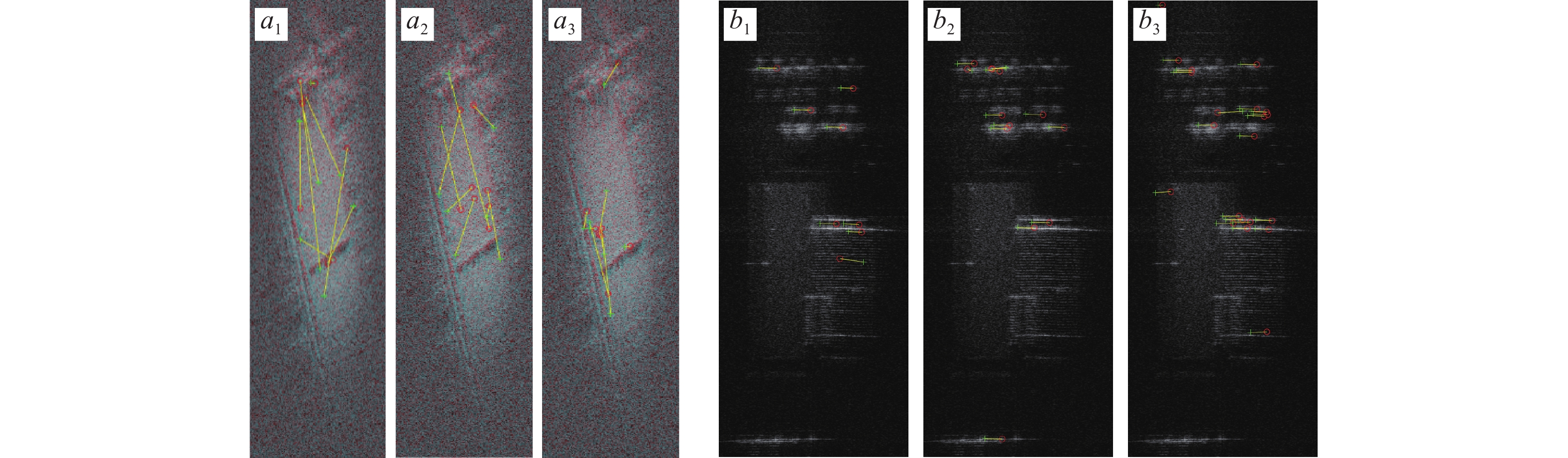

The registration results of the improved RANSAC algorithm with preprocessing are shown in Figures 7, 8, and 9. Figures 7a1, 8a1, 9a1, 7b1, 8b1, and 9b1 show the matched feature point pairs, which exhibit consistent slope and distance characteristics. As Table 1 shows, the KAZE algorithm extracts more correct feature point pairs than the SIFT and SURF algorithms. Figures 7a2, 8a2, 9a2, 7b2, 8b2, and 9b2 illustrate the overlap between the first-frame mask and the registered, transformed second-frame mask for the SIFT, SURF, and KAZE algorithms, respectively; the KAZE algorithm achieves the highest mask overlap rate, 80.85%. These masks represent roads that may enter or leave the field of view during the flight, so they are not completely identical between the two images, but they retain complex shapes that do not change over time. The more similar the two images are, the higher their mask overlap rate. Because different transformation matrices typically do not produce the same overlap rate, P can serve as an effective measure of registration accuracy. Finally, Figures 7a3, 8a3, 9a3, 7b3, 8b3, and 9b3 show the composite images of the different frames after registration with the SIFT, SURF, and KAZE algorithms, respectively. The registered composite images appear clearer after denoising.

| Algorithm | Unmatched image | SIFT | SURF | KAZE |
| --- | --- | --- | --- | --- |
| Coarse registration | - | 47 | 75 | 46 |
| Consistent slope registration | - | 5 | 5 | 6 |
| Precise registration | - | 2 | 2 | 4 |
| P | 35.09% | 77.88% | 78.36% | 80.85% |

Table 1 summarizes the registration results. After registration, the mask overlap rate improves markedly, from 35.09% for the unmatched images to between 77.88% and 80.85%. KAZE-based registration gives the best result, with more precisely matched point pairs (4, versus 2 for SIFT and SURF) and the highest overlap rate (80.85%, versus 77.88% for SIFT and 78.36% for SURF), indicating superior performance and greater robustness.

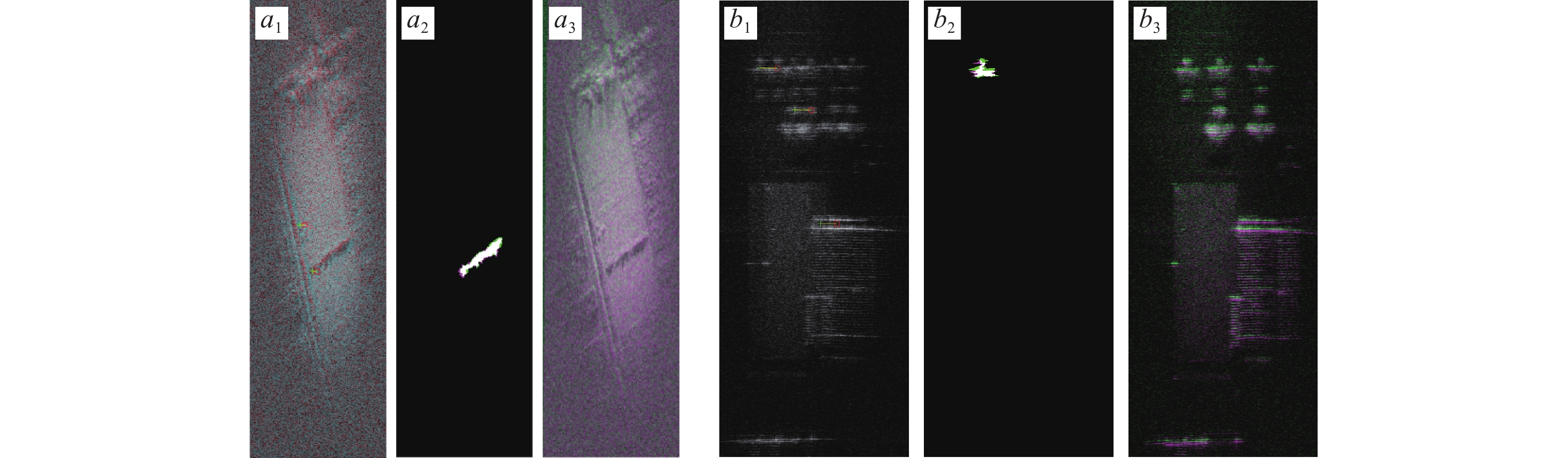

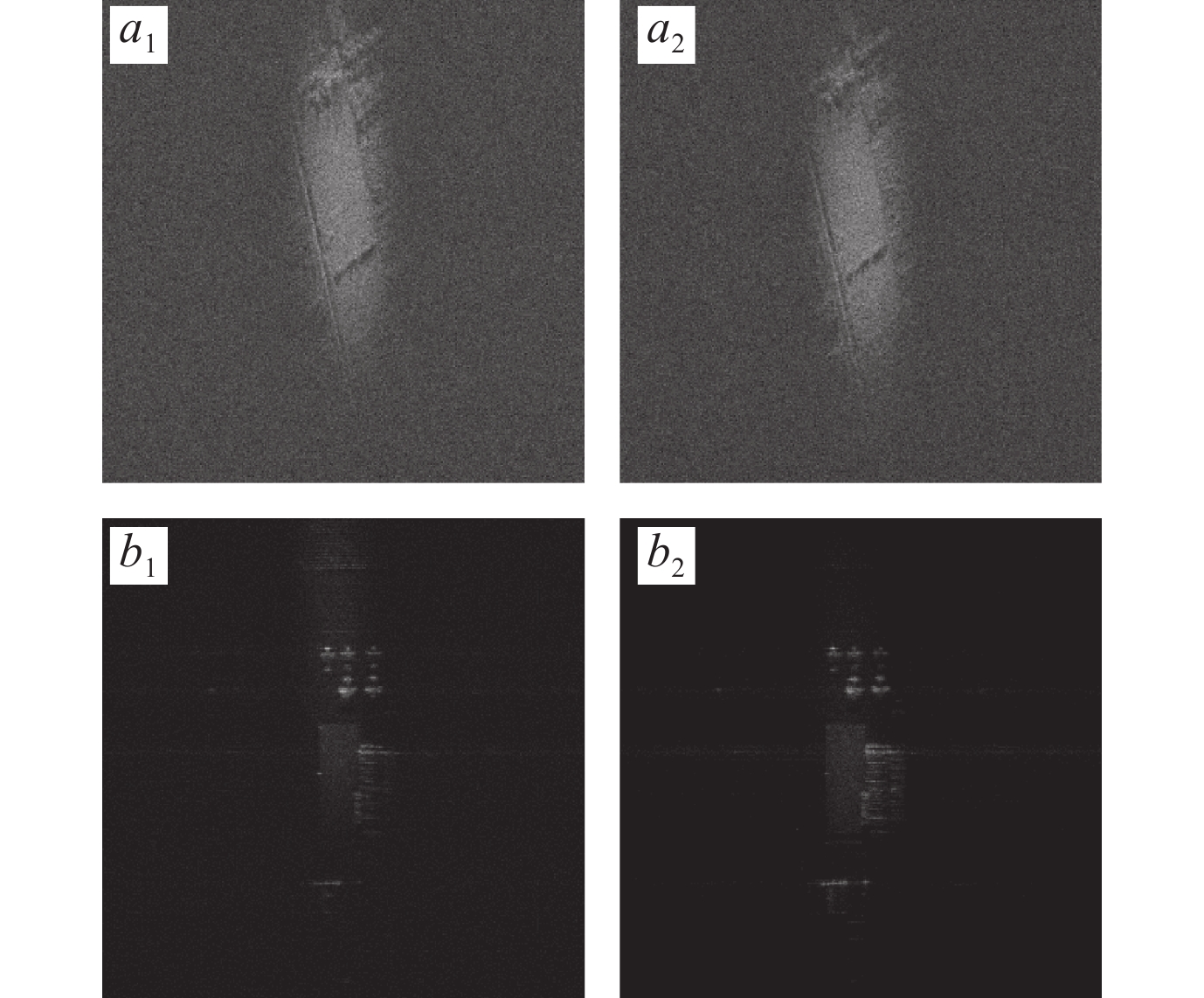

The images are first Gaussian-filtered to mitigate noise, and the filtered images are then normalized, as shown in Figure 10. Fusing the images directly without registration leads to blurring.

This article uses the SIFT, SURF, and KAZE algorithms for image registration and fusion. Figure 11 shows that the fused images contain more information than the original images, and Table 2 confirms that the fused images have higher entropy than the original frames. Table 2 also shows a significant decrease in the normalized standard deviation, indicating that image fusion reduces image noise.

| Algorithm | Unmatched image | SIFT | SURF | KAZE |
| --- | --- | --- | --- | --- |
| Coarse registration | - | 77 | 25 | 427 |
| Consistent slope registration | - | 8 | 13 | 21 |
| Precise registration | - | 3 | 4 | 6 |
| P | 9.11% | 50.74% | 53.72% | 56.66% |

| Algorithm | First frame | Second frame | SIFT | SURF | KAZE |
| --- | --- | --- | --- | --- | --- |
| D | | | | | |
| EN | | | | | |

| Algorithm | First frame | Second frame | SIFT | SURF | KAZE |
| --- | --- | --- | --- | --- | --- |
| D | | | | | |
| EN | | | | | |

In Table 2, D is the normalized grayscale standard deviation within the masked region. The masked region consists of a single land-surface type with approximately uniform dielectric constant, so the grayscale standard deviation within the mask is attributed primarily to noise, and a smaller normalized standard deviation indicates less noise. Here, the "mask" is the overlap between the mask of the first frame and the transformed mask of the registered second frame. The fused images exhibit less noise than the original frames, demonstrating that image fusion inherently reduces noise. With the KAZE algorithm, the fused images show the lowest noise and the highest registration accuracy while also gaining information content.

The article investigates the registration and fusion methods of terahertz sequence images. In this study, an improved RANSAC algorithm with a forward preprocessing method is developed to address the challenging problem of registering image sequences with strong noise. The KAZE operator demonstrates stronger robustness in the algorithm for registering images with strong noise, resulting in a significantly higher number of correctly matched point pairs compared to SIFT and SURF algorithms, thus making it more suitable for such scenarios. Furthermore, it is demonstrated that image registration and fusion algorithms can enhance the amount of information in an image while reducing image noise.

This work was supported in part by Science and Technology Innovation Program of Hunan Province under No. 2024RC3143, in part by the National Natural Science Foundation of China under Grant

[1] W. H. Yeo, S. H. Jung, S. J. Oh, et al., “J-Net: Improved U-net for terahertz image super-resolution,” Sensors, vol. 24, no. 3, article no. 932, 2024. DOI: 10.3390/s24030932

[2] X. R. Li, D. Mengu, N. T. Yardimci, et al., “Plasmonic photoconductive terahertz focal-plane array with pixel super-resolution,” Nature Photonics, vol. 18, no. 2, pp. 139–148, 2024. DOI: 10.1038/s41566-023-01346-2

[3] S. Hu, X. Y. Ma, Y. Ma, et al., “Terahertz image enhancement based on a multiscale feature extraction network,” Optics Express, vol. 32, no. 19, pp. 32821–32835, 2024. DOI: 10.1364/OE.529260

[4] L. Fan, H. Q. Wang, Q. Yang, et al., “High-quality airborne terahertz video SAR imaging based on echo-driven robust motion compensation,” IEEE Transactions on Geoscience and Remote Sensing, vol. 62, article no. 2001817, 2024. DOI: 10.1109/TGRS.2024.3357697

[5] L. Fan, H. Q. Wang, Q. Yang, et al., “THz-ViSAR-oriented fast indication and imaging of rotating targets based on nonparametric method,” IEEE Transactions on Geoscience and Remote Sensing, vol. 62, article no. 5217515, 2024. DOI: 10.1109/TGRS.2024.3427653

[6] A. Reigber, R. Scheiber, M. Jager, et al., “Very-high-resolution airborne synthetic aperture radar imaging: Signal processing and applications,” Proceedings of the IEEE, vol. 101, no. 3, pp. 759–783, 2013. DOI: 10.1109/JPROC.2012.2220511

[7] M. Elad and A. Feuer, “Super-resolution reconstruction of image sequences,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 21, no. 9, pp. 817–834, 1999. DOI: 10.1109/34.790425

[8] Y. T. Chen, L. W. Liu, V. Phonevilay, et al., “Image super-resolution reconstruction based on feature map attention mechanism,” Applied Intelligence, vol. 51, no. 7, pp. 4367–4380, 2021. DOI: 10.1007/s10489-020-02116-1

[9] Y. C. Hung, W. T. Su, T. H. Chao, et al., “Terahertz deep learning fusion computed tomography,” Optics Express, vol. 32, no. 10, pp. 17763–17774, 2024. DOI: 10.1364/OE.518997

[10] W. N. Zhang, “Robust registration of SAR and optical images based on deep learning and improved Harris algorithm,” Scientific Reports, vol. 12, no. 1, article no. 5901, 2022. DOI: 10.1038/s41598-022-09952-w

[11] Z. J. Wang, D. Ziou, C. Armenakis, et al., “A comparative analysis of image fusion methods,” IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 6, pp. 1391–1402, 2005. DOI: 10.1109/TGRS.2005.846874

[12] L. F. Tang, X. Y. Xiang, H. Zhang, et al., “DIVFusion: Darkness-free infrared and visible image fusion,” Information Fusion, vol. 91, pp. 477–493, 2023. DOI: 10.1016/j.inffus.2022.10.034

[13] B. Zitová and J. Flusser, “Image registration methods: A survey,” Image and Vision Computing, vol. 21, no. 11, pp. 977–1000, 2003. DOI: 10.1016/S0262-8856(03)00137-9

[14] X. Xiong, G. W. Jin, Q. Xu, et al., “Robust SAR image registration using rank-based ratio self-similarity,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 14, pp. 2358–2368, 2021. DOI: 10.1109/JSTARS.2021.3055023

[15] W. N. Zhang and Y. Q. Zhao, “An improved SIFT algorithm for registration between SAR and optical images,” Scientific Reports, vol. 13, no. 1, article no. 6346, 2023. DOI: 10.1038/s41598-023-33532-1

[16] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” International Journal of Computer Vision, vol. 60, no. 2, pp. 91–110, 2004. DOI: 10.1023/B:VISI.0000029664.99615.94

[17] P. F. Alcantarilla, A. Bartoli, and A. J. Davison, “KAZE features,” in Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, pp. 214–227, 2012.

[18] Y. H. Chang, Q. Xu, X. Xiong, et al., “SAR image matching based on rotation-invariant description,” Scientific Reports, vol. 13, no. 1, article no. 14510, 2023. DOI: 10.1038/s41598-023-41592-6

[19] P. H. S. Torr and A. Zisserman, “MLESAC: A new robust estimator with application to estimating image geometry,” Computer Vision and Image Understanding, vol. 78, no. 1, pp. 138–156, 2000. DOI: 10.1006/cviu.1999.0832

[20] X. Shen, F. Darmon, A. A. Efros, et al., “RANSAC-flow: Generic two-stage image alignment,” in Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, pp. 618–637, 2020.

[21] M. Hassaballah, A. A. Abdelmgeid, and H. A. Alshazly, “Image features detection, description and matching,” in Image Feature Detectors and Descriptors, A. I. Awad and M. Hassaballah, Eds. Springer, Cham, pp. 11–45, 2016.
