Citation: Yike Sun, Xiaogang Chen, Yijun Wang, et al., “Connecting minds and devices: a fifty-year review of brain-computer interfaces,” Chinese Journal of Electronics, vol. x, no. x, pp. 1–10, xxxx. DOI: 10.23919/cje.2023.00.325

This review provides a detailed examination of the evolution of brain-computer interfaces (BCIs), a pioneering segment of neurotechnology that facilitates a connection between the human brain and digital devices without surgical procedures. It delves into the historical progression and the advancements in BCIs, particularly emphasizing non-invasive techniques that have significantly altered the interactions of individuals with disabilities with their environment. While recognizing the contributions of other BCI modalities, this analysis predominantly focuses on non-invasive non-implantation strategies, tracing their trajectory from theoretical models to practical implementations. The review dissects the pivotal developments and technological milestones that have shaped the BCI domain, integrating perspectives from neuroscience, engineering, and computer science. It chronologically highlights key breakthroughs, demonstrating the evolution of non-invasive BCIs from rudimentary experiments to established neurotechnology, expanding opportunities for communication and control. Standing on the precipice of a new chapter in human-technology integration, this review highlights the transformative potential of BCIs and advocates for ongoing innovation and the careful contemplation of their broader impact on cognitive functions and societal structures.

The term “brain-computer interface (BCI)” first emerged in official publications more than half a century ago. An essential milestone occurred in 1973, when Vidal built a system based on visual evoked potentials in his laboratory and named it the “BCI system” [1]. The BCI nomenclature was formally recognized in 1999 at the first international conference dedicated to the subject [2]. Although the search for a universally accepted scientific definition of BCI continues, Wolpaw’s 2012 characterization remains widely cited: a BCI is “a system that measures central nervous system (CNS) activity and converts it into artificial output that replaces, restores, enhances, supplements, or improves natural CNS output, thereby altering the ongoing interaction between the CNS and its external or internal environment” [3]. This definition emphasizes the measurement of signals originating from the CNS, which comprises the brain and the spinal cord, as distinct from the peripheral nervous system (PNS).

Over the last fifty years, the conceptual framework of BCI technology has expanded significantly. In 2021, scholars proposed a broader interpretation of BCI, characterizing it as a system facilitating direct engagement between the human brain and an external apparatus, hereafter referred to as a generalized BCI [4]. This inclusive definition acknowledges emerging developments, including the incorporation of feedback mechanisms into brain-computer interactions and the convergence of neurocomputing with artificial intelligence (AI). Significantly, modern BCI systems have initiated a new phase of bidirectional communication, surpassing the earlier model of one-directional instructions from the human brain to the machine. Data now flow in both directions: neural signals inform machine operations, while the machine in turn delivers feedback detectable by the user’s brain. This establishes a closed loop of interaction in which users not only manipulate devices through their cerebral activity but also receive and process feedback from those devices, thereby enhancing the interactive experience [5].

BCI sits at the frontier of scientific and technological innovation, at the intersection of brain science, neural engineering, AI, and information technology. Its influence extends across scientific research, medical treatment, education, industry, and entertainment. With the rapid rise of AI, human-computer interaction is becoming increasingly important, and BCI represents its most direct form, shaping how humans and AI engage with each other. How individuals should interface with machines, and how human intelligence can be combined with AI, are the central questions that BCI research stands to address.

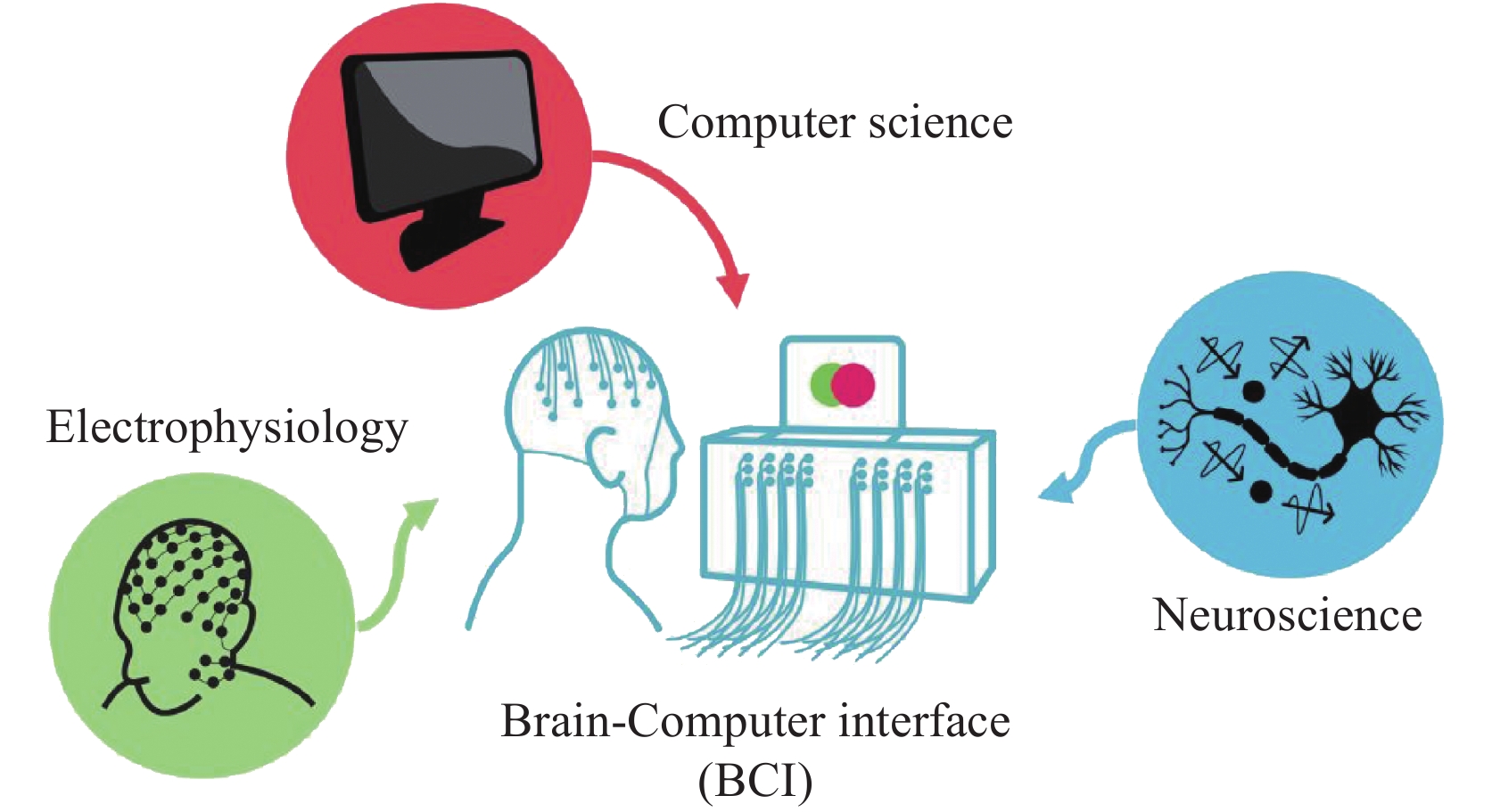

The evolution of BCI technology did not unfold abruptly but rather emerged as a culmination of interdisciplinary efforts. Its genesis was intricately interwoven with advancements in electrophysiology, computer science, and neuroscience. Indeed, the foundational roots of BCI technology can be traced back to pivotal moments in the early 20th century. The journey commenced in 1924 when Hans Berger made history by recording human electroencephalogram (EEG) signals, an event widely recognized as the dawn of electrophysiology [6]. Notably, the conceptual framework of BCI began to take shape in 1938, when Herbert Jasper conveyed the notion of decoding language through brain waves in a holiday greeting card to Hans Berger [7]. This correspondence served as the original prototype of BCI technology, marking a significant stride in the realm of electrophysiology. Electrophysiology, thus, became the inaugural piece of the BCI puzzle, establishing a direct link between the human brain and computers.

The second crucial piece required for the birth of BCI was the evolution of computer science. The advent of the world’s first general-purpose computer, the ENIAC, on February 14, 1946, at the University of Pennsylvania marked a milestone in computational history [8]. In subsequent decades, the relentless progress of computer science laid the foundation for digital signal processing and artificial intelligence, indispensable components that underpinned BCI technology.

The third pivotal piece of the BCI puzzle was neuroscience. Since the early anatomical elucidation of the nervous system, efforts to harness neural control have persisted. A watershed moment occurred in 1969, when Eberhard Erich Fetz applied the principle of operant conditioning and demonstrated that a primate could learn to control the pointer of an analog meter through the activity of a single neuron in its motor cortex [9]. This groundbreaking experiment marked the earliest practical application of BCI and established the path toward BCI technology and its fundamental principles. In essence, the development of BCI technology was not a solitary endeavor but an interplay of electrophysiological insights, computational advances, and neuroscientific discoveries. These disciplines converged over decades, culminating in the multifaceted paradigm of BCI, which continues to shape human-machine interface technologies.

Over the course of the last five decades, the evolution of BCI can be delineated into three distinct phases. The initial phase, spanning from 1973 to 1988, encompassed a 15-year period characterized by fervent discourse and debate within the academic community. The subsequent phase, spanning from 1988 to 2003, extended over 15 years of scholarly inquiry and research activities. This was succeeded by the third phase, from 2003 to 2023, a 20-year span during which BCI technology advanced significantly.

A pivotal moment in the history of BCI occurred in 1988, when Farwell and Donchin used the P300 component of event-related potentials to devise the first P300-based BCI, employing a row/column flashing paradigm for spellers and thereby establishing the P300-BCI paradigm [10]. This seminal breakthrough formalized BCI technology’s emergence as a prominent discipline within academia. Another noteworthy development emerged in 1995, when the steady-state visually evoked potential (SSVEP) was first harnessed for BCI applications [11]. Subsequently, in 1999, SSVEP was formally established as a paradigm for BCI [12]. In 2004, a significant milestone was achieved with the introduction of the BrainGate system, which marked the first instance of real-time BCI application on a patient [13]. The year 2019 witnessed the formal release of a BCI product by Neuralink [14], exemplifying the ongoing expansion of BCI technology into the commercial sector.

The architecture and technological components of BCI systems have been subject to continuous refinement, with each iteration building upon the previous. At present, a standard BCI system comprises five pivotal technologies, namely Paradigm, Signal Acquisition, Processing, Output, and Feedback. In the subsequent sections, we shall elucidate the current state of BCI development within the framework of these five fundamental technologies.

From a communicative standpoint, the BCI paradigm can be likened to a coding protocol whereby cerebral intentions are transcribed into signals originating from neural activity during specific cognitive tasks. Presently, each BCI system employs a distinctive method for signal encoding. From a paradigmatic viewpoint, BCI systems can be classified into three categories: Active, Reactive, and Passive [15].

Among these, the Active category pertains to signal encoding through active cognitive tasks, during which the user consciously selects a task [16], thereby inducing specific and pertinent alterations in the user’s EEG. Conventional paradigms like motor imagery (MI) [17]-[19] and slow cortical potentials [20]-[22] fall within the purview of Active BCI. Moreover, recent years have seen a surge in innovative approaches within this domain, including the use of handwriting imagery as a novel encoding strategy [23], among others. The tactile/somatosensory BCI forms an integral part of the Active category, incorporating techniques that leverage the steady-state somatosensory evoked potential (SSSEP) [24]-[26], in addition to those that harness tactile event-related desynchronization (ERD) [27], [28]. Complementing the tactile/somatosensory BCI, motor BCI paradigms have also been explored, with a number of studies integrating both modalities to enhance system performance and user experience [29], [30].

Reactive BCI leverages perceptual stimuli, such as visual and auditory cues, to induce evoked potentials that serve as a means for signal encoding. This approach is currently recognized as the most widely adopted paradigm within the field of BCI techniques. Prominent paradigms encompassed by Reactive BCI include the P300 [31]-[34], SSVEP [35]-[37], Rapid Serial Visual Presentation (RSVP) [38]-[40], Code-Modulated Visual Evoked Potentials [41], and Auditory Steady-State Response paradigms [42]-[44]. The SSVEP paradigm is particularly distinguished for its precision and rapid response, attributable to the consistent frequency and signal resilience it demonstrates. Despite its merits, the comfort level associated with SSVEP remains less than ideal, which has catalyzed continued research into enhancing its user experience. Innovations in SSVEP paradigm development include the integration of higher refresh rates [45], the adoption of motion-based coding techniques [46], and the experimental application of dual-frequency stimulus coding [47]-[50].

Passive BCI, in contrast, serves as a tool for monitoring the user’s mental state and subsequently adapting the application without necessitating the transmission of voluntary commands through the neural signal. It involves encoding factors such as attention and emotional states, with a focus on analyzing the current state of the brain, excluding the induction of additional components. This approach exemplifies the capacity to seamlessly integrate user experience with real-time neural activity, thus enhancing the application’s adaptability and responsiveness. Techniques such as emotion recognition [51]-[53], error potential detection [54], [55], and the like fall within this category.

Jacques Vidal originally described a BCI as a system driven by EEG signals [1]. However, the landscape of BCI system architectures has undergone significant transformations over the years. While EEG remains the most prevalent signal acquisition technology, a plethora of new modalities has emerged. In 2023, we surveyed and categorized contemporary research on BCI signal acquisition techniques, classifying them into nine categories informed by both clinician and engineer perspectives [56].

From a clinician’s viewpoint, BCI signal acquisition techniques can be stratified as non-invasive, minimal-invasive, and invasive. Engineers, by contrast, categorize these techniques by sensor placement into non-implantation, intervention, and implantation. The prevailing technology falls under the category of ‘non-invasive non-implantation’, encompassing EEG [57], [58], magnetoencephalography [59]-[61], functional near-infrared spectroscopy [62]-[65], and other methods that monitor brain activity directly from outside the body. Although these techniques offer high utility and carry minimal health risks, their signal quality is susceptible to various interferences. The second most popular category is ‘invasive implantation’, encompassing intracortical microelectrode arrays like the Utah Array [66], Michigan probes [67], stereotactic electroencephalography [68]-[70], Neuropixels [71], and similar approaches. While these methods boast a high theoretical upper limit of signal quality, they come with elevated health risks. In recent years, many scholars have developed new flexible implantation schemes using new materials such as flexible silicon thin-film transistors, carbon nanotubes, and silk protein [72]-[75].

Recent years have witnessed the emergence of novel techniques that strike a delicate balance between signal quality and health risk, often concentrated within the middle segment of the nine-box classification system [56]. For instance, the ‘minimal-invasive intervention’ category features the Stentrode technique, employing a vascular stent as a carrier to significantly mitigate procedural risks while preserving signal fidelity [76], [77]. Similarly, the ‘non-invasive intervention’ category showcases the In-ear EEG method, which acquires robust signals by utilizing the ear canal, a natural human cavity, thereby obviating the need for surgery [78], [79]. Concurrently, innovations like minimally invasive local-skull electrophysiological modification (MILEM), classified under ‘minimal-invasive non-implantation’, achieve impressive signal quality through minimally invasive cranial procedures, complemented by extracorporeal sensors for signal monitoring [80]-[82]. These advancements represent promising directions, enhancing signal quality while circumventing the risks associated with implantation surgeries.
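The nine-box classification described above can be written down as a small data structure. The following is a minimal sketch in Python, not part of the cited survey [56]: the names `Invasiveness`, `SensorPlacement`, `EXAMPLES`, `category_name`, and `all_categories` are hypothetical, and only the cells for which the text names an example technique are populated. The remaining cells are left empty rather than guessed.

```python
from enum import Enum


class Invasiveness(Enum):
    """Clinician's axis (hypothetical name for illustration)."""
    NON_INVASIVE = "non-invasive"
    MINIMAL_INVASIVE = "minimal-invasive"
    INVASIVE = "invasive"


class SensorPlacement(Enum):
    """Engineer's axis (hypothetical name for illustration)."""
    NON_IMPLANTATION = "non-implantation"
    INTERVENTION = "intervention"
    IMPLANTATION = "implantation"


# Example techniques taken from the text; cells the text does not mention are omitted.
EXAMPLES = {
    (Invasiveness.NON_INVASIVE, SensorPlacement.NON_IMPLANTATION): [
        "EEG", "magnetoencephalography", "functional near-infrared spectroscopy"],
    (Invasiveness.NON_INVASIVE, SensorPlacement.INTERVENTION): ["In-ear EEG"],
    (Invasiveness.MINIMAL_INVASIVE, SensorPlacement.NON_IMPLANTATION): ["MILEM"],
    (Invasiveness.MINIMAL_INVASIVE, SensorPlacement.INTERVENTION): ["Stentrode"],
    (Invasiveness.INVASIVE, SensorPlacement.IMPLANTATION): [
        "Utah Array", "Michigan probes",
        "stereotactic electroencephalography", "Neuropixels"],
}


def category_name(invasiveness, placement):
    """Compose the label used in the text, e.g. 'non-invasive non-implantation'."""
    return f"{invasiveness.value} {placement.value}"


def all_categories():
    """Enumerate the full 3 x 3 grid of (clinician, engineer) combinations."""
    return [(i, p) for i in Invasiveness for p in SensorPlacement]


for cell in all_categories():
    print(category_name(*cell), "->", EXAMPLES.get(cell, []))
```

Keeping the two axes as separate enumerations mirrors the point made in the text: the clinician's and the engineer's views are independent, so a technique is identified by a pair of labels rather than by a single ranking.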

The processing of a BCI system encompasses both preprocessing and decoding stages. Preprocessing methods are fundamental and span a range of techniques, including independent component analysis (ICA) [83], the wavelet transform [84], and standard filtering operations. The decoding methodology, by contrast, is closely tied to the chosen paradigm and the signal acquisition module. Historically, conventional approaches such as support vector machines (SVM) [85] and linear discriminant analysis (LDA) [86] have enjoyed widespread adoption. However, the field has witnessed a rapid proliferation of specialized machine learning algorithms tailored to specific paradigms.

In 2006, researchers introduced the method of canonical correlation analysis (CCA) [87] as an efficacious tool for analyzing SSVEP paradigms. This approach was further advanced in 2015 with the introduction of the filter bank canonical correlation analysis (FBCCA) algorithm, designed to enhance high-speed spelling capabilities. In 2018, the functionality of CCA was expanded through its integration with task-related component analysis (TRCA) [37], facilitating personalized training adaptations for individual users.
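To make the idea behind CCA-based SSVEP decoding concrete, the following is a minimal sketch in Python using NumPy. It is an illustration under stated assumptions, not the implementation from [87]: it covers plain CCA only (not FBCCA or TRCA), the function names are hypothetical, the EEG segment is synthetic, and the sampling rate, number of harmonics, and candidate frequencies are arbitrary choices. For each candidate stimulus frequency, sine and cosine templates are built, the largest canonical correlation between the multichannel EEG and the templates is computed, and the frequency with the highest correlation is selected.

```python
import numpy as np


def reference_signals(freq, n_samples, fs, n_harmonics=2):
    """Sine/cosine templates at `freq` and its harmonics, shape (n_samples, 2 * n_harmonics)."""
    t = np.arange(n_samples) / fs
    columns = []
    for h in range(1, n_harmonics + 1):
        columns.append(np.sin(2 * np.pi * h * freq * t))
        columns.append(np.cos(2 * np.pi * h * freq * t))
    return np.column_stack(columns)


def max_canonical_correlation(x, y):
    """Largest canonical correlation between two (samples x features) matrices.

    After centering, the canonical correlations are the singular values of
    Qx^T Qy, where Qx and Qy are orthonormal bases of the column spaces
    (this assumes both matrices have full column rank).
    """
    qx, _ = np.linalg.qr(x - x.mean(axis=0))
    qy, _ = np.linalg.qr(y - y.mean(axis=0))
    singular_values = np.linalg.svd(qx.T @ qy, compute_uv=False)
    return float(np.clip(singular_values[0], 0.0, 1.0))


def detect_ssvep_frequency(eeg, fs, candidate_freqs, n_harmonics=2):
    """Return the candidate frequency whose templates correlate best with `eeg`."""
    scores = [
        max_canonical_correlation(
            eeg, reference_signals(f, eeg.shape[0], fs, n_harmonics))
        for f in candidate_freqs
    ]
    return candidate_freqs[int(np.argmax(scores))], scores


# Synthetic demo (assumed values): 4 channels, 2 s at 250 Hz, a 10 Hz response plus noise.
rng = np.random.default_rng(0)
fs = 250
t = np.arange(2 * fs) / fs
phases = np.array([0.0, 0.5, 1.0, 1.5])  # a different phase on each channel
eeg = np.sin(2 * np.pi * 10.0 * t[:, None] + phases) + rng.standard_normal((t.size, 4))

best, scores = detect_ssvep_frequency(eeg, fs, [8.0, 10.0, 12.0, 15.0])
print("detected frequency:", best)
```

Because the method needs only templates and no training data, it can be applied to a new user without calibration. This is the property that later training-based extensions trade away in exchange for higher accuracy.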

For the motor imagery (MI) paradigm, a seminal development was the introduction of common spatial patterns (CSP) in 2000 [88], which is predicated on the principle of variance maximization to improve classification performance. This paradigm experienced further refinement in 2008 with the advent of the Filter Bank common spatial pattern (FBCSP) [89], which tailors CSP to the specific demands of the MI paradigm. Building on these foundations, contemporary research has yielded additional algorithms [90] that continue to enhance BCI technology.
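The variance-maximization principle behind CSP can be sketched as follows. This is a minimal two-class illustration in Python with NumPy, not the formulation from [88] or the filter-bank variant of [89]: the function names are hypothetical and the data are synthetic. The sketch whitens the sum of the two class covariances and then eigendecomposes one whitened class covariance, so that filters at the two ends of the eigenvalue spectrum maximize variance for one class while minimizing it for the other.

```python
import numpy as np


def average_covariance(trials):
    """Mean trace-normalized spatial covariance; trials: (n_trials, n_channels, n_samples)."""
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Return (filters, eigenvalues); each row of `filters` is one spatial filter.

    Eigenvalues near 1 mark filters with high variance for class A,
    eigenvalues near 0 mark filters with high variance for class B.
    """
    cov_a = average_covariance(trials_a)
    cov_b = average_covariance(trials_b)
    # Whiten the composite covariance (assumed positive definite).
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitener = evecs @ np.diag(evals ** -0.5) @ evecs.T
    # Eigendecompose the whitened class-A covariance (eigenvalues ascending).
    d, u = np.linalg.eigh(whitener @ cov_a @ whitener.T)
    filters = u.T @ whitener
    # Keep the n_pairs filters from each end of the spectrum.
    keep = np.r_[np.arange(n_pairs), np.arange(len(d) - n_pairs, len(d))]
    return filters[keep], d[keep]


def log_variance_features(trials, filters):
    """Normalized log-variance of each spatially filtered trial, shape (n_trials, n_filters)."""
    projected = np.einsum("fc,tcs->tfs", filters, trials)
    var = projected.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


# Synthetic demo (assumed values): class A is strong on channel 0, class B on channel 2.
rng = np.random.default_rng(1)
scale_a = np.array([3.0, 1.0, 1.0])[None, :, None]
scale_b = np.array([1.0, 1.0, 3.0])[None, :, None]
trials_a = rng.standard_normal((20, 3, 200)) * scale_a
trials_b = rng.standard_normal((20, 3, 200)) * scale_b

filters, eigenvalues = csp_filters(trials_a, trials_b)
print("eigenvalues of the selected filters:", eigenvalues)
```

The resulting log-variance features would typically be passed to a simple classifier such as LDA. That step is omitted here to keep the sketch short.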

Notably, deep learning methods have become a critical component of the BCI field owing to their scalability and flexibility across paradigms. In particular, convolutional neural networks have become a focal point of investigation, leading to architectures such as EEGNet [91]. Moreover, deep learning techniques, including generative adversarial networks (GANs), have been increasingly leveraged for data augmentation, substantially contributing to the advancement of BCI systems [92].

BCI systems generate a variety of outputs, which can be categorized into three main domains based on their application: Communication, Environmental Control and Virtual Worlds, and Neural Prosthetics [93]. The domain of Communication-focused outputs includes technologies such as spelling devices [21], [31], [36], [94] and speech synthesis systems [95], [96]. Spelling devices decode individual characters, similar to a keyboard, and are fundamental for producing textual outputs. Additionally, certain BCI systems that provide only binary responses, such as “yes” and “no”, are also included in this category.

Speech synthesis, on the other hand, utilizes single syllables as the basic units for decoding, typically generated through mental imagery or the imagined movement of pronunciation muscles, resulting in comprehensive spoken outputs. Furthermore, researchers have investigated advanced decoding techniques and the simulation of social behaviors in the context of decoding textual or speech signals [97], [98], which enhances the realism of communicative interactions.

Control-oriented BCI applications are crucial within the field, enabling the control of robots [16], [99], vehicles [100], exoskeletons [101], computer mice [102], [103], and wheelchairs [104], [105], among others. As BCI systems are responsible solely for generating control commands, these commands can be integrated seamlessly into virtual reality environments, thereby facilitating interactive control within virtual worlds [106], [107]. This integration is extensively applied in environmental simulations and gaming [108], [109].

The category of Neural Prosthetics primarily serves individuals with disabilities, aiming to restore or replace impaired neural functions using BCI technology. Applications in this category include motor rehabilitation, such as restoring walking capabilities [110], and addressing neurological disorders like epilepsy and Parkinson’s disease [111], [112].

The feedback component holds immense significance within the realm of BCI systems, profoundly influencing their overall performance. Particularly in the context of BCI training, the efficacy of feedback mechanisms is pivotal as they play a crucial role in activating the learning mechanisms within the subject’s brain. This activation, in turn, serves to mitigate fluctuations in background mental activity and minimizes interference from various sources of electrical noise, thereby enhancing the system’s robustness and reliability [113].

The feedback mechanisms inherent in BCIs can be examined through two distinct yet complementary lenses: the cognitive and the experiential. The cognitive lens elucidates the impact of reward and punishment dynamics on user behavior. These dynamics are instantiated through immediate modifications in the participants’ scores or perceptions of correctness, providing concrete benchmarks for performance assessment [114], [115]. Employing the tenets of reinforcement learning, this feedback paradigm enables users to refine their actions to align with predefined goals. The iterative process of behavioral adjustment is driven by the systematic evaluation of performance outcomes, facilitated by the reward and punishment contingencies.

Conversely, the experiential lens concentrates on sensory feedback. This aspect incorporates advanced methods, such as electrical or magnetic stimulation, to convey feedback in a manner that is directly perceptible to users [116]-[118]. This sensory-oriented approach eschews abstract quantifiers such as scores in favor of translating information into stimuli that engage users’ sensory faculties. Sensory feedback thus fosters an instinctive and immediate grasp of the BCI’s operations, offering a more integrated and direct mode of communication within the system.

The confluence of cognitive and experiential feedback methodologies not only enriches the learning curve within BCIs but also amplifies the quality of the user interface. By synthesizing insights from cognitive neuroscience with cutting-edge technology, this dual-strategy approach markedly propels the sophistication of BCI systems. Such progress highlights the capacity for BCIs to be customized to the unique requirements of users, paving the way for broader adoption across diverse domains.

The evolution of the BCI field over the last five decades is conspicuous. Nonetheless, the domain still grapples with formidable challenges, chief among them the issue of BCI biocompatibility. The inherent mismatch between the carbon-based human biological system and the silicon-based architecture of computers must be resolved to attain the goal of human-computer interoperability. Researchers have made numerous attempts to address this problem, including the exploration of novel flexible materials with enhanced biocompatibility [119], [120].

Here we propose a further concept: the use of autologous cells in the construction of BCI devices. The use of autologous cells is widely acknowledged as a viable way to circumvent biocompatibility issues [121], yet it remains underutilized within the BCI field. Notably, Prox et al. in 2021 designed a deep brain stimulation (DBS) device in which autologous neuronal cells and cardiomyocytes were encapsulated within an agar gel shell, offering a pivotal precedent [122]. This development prompts us to contemplate further possibilities.

Figure 3 illustrates an autologous implanted BCI system. In our conceptualization, the device is built from the subject’s own cells. Initially, a segment of neuron is surgically implanted in the brain, where it assumes a wire-like role, while its cytosol is cultured within minuscule perforations made in the skull. Functional muscles are linked to its extremities, enabling a range of functions. The left part of Figure 3 depicts an autologous implanted BCI for reading cerebral signals: through the neuronal ‘wires’, the system channels brain signals to functional muscles, transforming neural activity that is difficult to record into readily observable electromyographic signals. The right part of Figure 3 depicts an autologous implanted BCI for cerebral stimulation. In this configuration, the terminal muscle contracts rhythmically and autonomously, akin to a cardiomyocyte, and the neuronal ‘wires’ convert these contractions into electrical signals that are delivered back into the brain.

This design stands apart because it can, in principle, circumvent rejection, a challenge pervasive in traditional implantation methods. It also opens new possibilities for neural engineering.

Research in the domain of BCI in China commenced later than in some other regions. However, propelled by rapid advancements in science and technology, along with substantial state investment, China has achieved significant strides in this field. Notably, in the realm of EEG-based BCIs, China has secured a leading position globally. The consistent hosting of the BCI-controlled robot competition for over a decade has not only showcased China’s advancements but has also catalyzed further development of BCIs within the nation [123]. The synergy across computer science, neuroscience, materials science, and electronic engineering is fostering a dynamic interdisciplinary research environment for BCIs in China, setting the stage for potential scientific and technological breakthroughs. Projections indicate that BCI technology in China will witness enhanced progress, particularly in the areas of algorithm optimization, signal processing, and the refinement of non-invasive and minimally invasive technologies.

In the last fifty years, BCI technology has evolved from speculative fiction to a tool with practical applications in industry. This shift from theoretical to practical is significant, indicating a new era of change and innovation. Looking forward, we expect the integration of advanced intracortical micro-electrode arrays, potentially comprising thousands of channels, within the next few decades. Such technology may mitigate biocompatibility challenges by incorporating patients’ own engineered tissues. We also foresee the rise of specialized nanomaterials and flexible electronics, which could lead to less invasive neural interfaces that integrate smoothly with brain tissue.

On the software side, increases in computational power are expected to enable real-time interpretation of complex neural patterns, leveraging the growing insights from connectomes. This progress could enable immersive neural virtual reality environments, providing experiences that surpass current limitations. BCI systems will likely become more sophisticated, allowing for natural and intuitive user communication, enhancing interaction levels.

Moreover, BCIs may extend beyond medical applications to improve cognitive functions like memory and attention in healthy individuals. They will be critical in restoring movement for those with paralysis through devices like spinal cord stimulators and exoskeletons. Neural implants could become key in managing mental health conditions and treating neurodegenerative diseases. Regular use of BCIs may allow for the sharing of mental states, enriching human interaction, and a global BCI network could facilitate collaborative ideation.

However, it is crucial to recognize and address the potential downsides of BCIs. Issues such as privacy infringement through widespread surveillance, the risk of cyberattacks on neural implants, and ethical dilemmas in military applications need to be addressed with strong safeguards and ethical guidelines.

In summary, the coming revolution in BCIs is likely to have a profound and far-reaching impact on society, drawing humanity closer to the landscapes depicted in science fiction, where technology augments cognitive capacities. The fusion of human cognition and computational power may endow individuals with capabilities hitherto deemed superhuman, progressively blurring the distinction between technology and humanity and reshaping our species. Throughout this transformation, judicious governance remains imperative; the vast potential of BCIs should encourage us to welcome this future with anticipation while continuing to weigh its consequences carefully.

The authors would like to thank Ziyu Zhang from Xiamen University and Yuqing Zhao from the Central Academy of Fine Arts for their help in drawing the pictures in this article.

[1] J. J. Vidal, “Toward direct brain-computer communication,” Annual Review of Biophysics, vol. 2, no. 1, pp. 157–180, 1973. DOI: 10.1146/annurev.bb.02.060173.001105

[2] J. R. Wolpaw, N. Birbaumer, W. J. Heetderks, et al., “Brain-computer interface technology: A review of the first international meeting,” IEEE Transactions on Rehabilitation Engineering, vol. 8, no. 2, pp. 164–173, 2000. DOI: 10.1109/tre.2000.847807

[3] J. J. Shih, D. J. Krusienski, and J. R. Wolpaw, “Brain-computer interfaces in medicine,” Mayo Clinic Proceedings, vol. 87, no. 3, pp. 268–279, 2012. DOI: 10.1016/j.mayocp.2011.12.008

[4] X. R. Gao, Y. J. Wang, X. G. Chen, et al., “Interface, interaction, and intelligence in generalized brain-computer interfaces,” Trends in Cognitive Sciences, vol. 25, no. 8, pp. 671–684, 2021. DOI: 10.1016/j.tics.2021.04.003

[5] C. Hughes, A. Herrera, R. Gaunt, et al., “Bidirectional brain-computer interfaces,” Handbook of Clinical Neurology, vol. 168, pp. 163–181, 2020. DOI: 10.1016/B978-0-444-63934-9.00013-5

[6] D. Millett, “Hans Berger: From psychic energy to the EEG,” Perspectives in Biology and Medicine, vol. 44, no. 4, pp. 522–542, 2001. DOI: 10.1353/pbm.2001.0070

[7] J. R. Wolpaw and E. W. Wolpaw, “Brain-computer interfaces: Something new under the sun,” in Brain-Computer Interfaces: Principles and Practice, J. Wolpaw and E. W. Wolpaw, Eds. Oxford University Press, Oxford, UK, 2012.

[8] S. McCartney, ENIAC: The Triumphs and Tragedies of the World’s First Computer. Walker & Company, New York, NY, USA, 1999.

[9] E. E. Fetz, “Operant conditioning of cortical unit activity,” Science, vol. 163, no. 3870, pp. 955–958, 1969. DOI: 10.1126/science.163.3870.955

[10] L. A. Farwell and E. Donchin, “Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials,” Electroencephalography and Clinical Neurophysiology, vol. 70, no. 6, pp. 510–523, 1988. DOI: 10.1016/0013-4694(88)90149-6

[11] G. R. McMillan, G. L. Calhoun, M. S. Middendorf, et al., “Direct brain interface utilizing self-regulation of steady-state visual evoked response (SSVER),” in Proceedings of the RESNA ‘95 Annual Conference, Vancouver, Canada, pp. 693–695, 1995.

[12] M. Cheng and S. K. Gao, “An EEG-based cursor control system,” in Proceedings of the First Joint BMES/EMBS Conference. 1999 IEEE Engineering in Medicine and Biology 21st Annual Conference and the 1999 Annual Fall Meeting of the Biomedical Engineering Society, Atlanta, GA, USA, article no. 669, 1999.

[13] L. R. Hochberg, M. D. Serruya, G. M. Friehs, et al., “Neuronal ensemble control of prosthetic devices by a human with tetraplegia,” Nature, vol. 442, no. 7099, pp. 164–171, 2006. DOI: 10.1038/nature04970

[14] E. Musk and Neuralink, “An integrated brain-machine interface platform with thousands of channels,” Journal of Medical Internet Research, vol. 21, no. 10, article no. e16194, 2019. DOI: 10.2196/16194

[15] T. O. Zander and C. Kothe, “Towards passive brain–computer interfaces: Applying brain–computer interface technology to human–machine systems in general,” Journal of Neural Engineering, vol. 8, no. 2, article no. 025005, 2011. DOI: 10.1088/1741-2560/8/2/025005

[16] A. E. Hramov, V. A. Maksimenko, and A. N. Pisarchik, “Physical principles of brain–computer interfaces and their applications for rehabilitation, robotics and control of human brain states,” Physics Reports, vol. 918, pp. 1–133, 2021. DOI: 10.1016/j.physrep.2021.03.002

[17] M. Hamedi, S. H. Salleh, and A. M. Noor, “Electroencephalographic motor imagery brain connectivity analysis for BCI: A review,” Neural Computation, vol. 28, no. 6, pp. 999–1041, 2016. DOI: 10.1162/NECO_a_00838

[18] J. H. Li and L. Q. Zhang, “Active training paradigm for motor imagery BCI,” Experimental Brain Research, vol. 219, no. 2, pp. 245–254, 2012. DOI: 10.1007/s00221-012-3084-x

[19] M. Ahn and S. C. Jun, “Performance variation in motor imagery brain–computer interface: A brief review,” Journal of Neuroscience Methods, vol. 243, pp. 103–110, 2015. DOI: 10.1016/j.jneumeth.2015.01.033

[20] N. Birbaumer, “Slow cortical potentials: Plasticity, operant control, and behavioral effects,” The Neuroscientist, vol. 5, no. 2, pp. 74–78, 1999. DOI: 10.1177/107385849900500211

[21] N. Birbaumer, N. Ghanayim, T. Hinterberger, et al., “A spelling device for the paralysed,” Nature, vol. 398, no. 6725, pp. 297–298, 1999. DOI: 10.1038/18581

[22] T. Hinterberger, S. Schmidt, N. Neumann, et al., “Brain-computer communication and slow cortical potentials,” IEEE Transactions on Biomedical Engineering, vol. 51, no. 6, pp. 1011–1018, 2004. DOI: 10.1109/TBME.2004.827067

[23] F. R. Willett, D. T. Avansino, L. R. Hochberg, et al., “High-performance brain-to-text communication via handwriting,” Nature, vol. 593, no. 7858, pp. 249–254, 2021. DOI: 10.1038/s41586-021-03506-2

[24] I. Choi, K. Bond, D. Krusienski, et al., “Comparison of stimulation patterns to elicit steady-state somatosensory evoked potentials (SSSEPs): Implications for hybrid and SSSEP-based BCIs,” in 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, pp. 3122–3127, 2015.

[25] S. Ahn, K. Kim, and S. C. Jun, “Steady-state somatosensory evoked potential for brain-computer interface—present and future,” Frontiers in Human Neuroscience, vol. 9, article no. 716, 2016. DOI: 10.3389/fnhum.2015.00716

| [26] |

J. Petit, J. Rouillard, and F. Cabestaing, “EEG-based brain–computer interfaces exploiting steady-state somatosensory-evoked potentials: A literature review,” Journal of Neural Engineering, vol. 18, no. 5, article no. 051003, 2021. DOI: 10.1088/1741-2552/ac2fc4

|

| [27] |

L. Yao, N. Jiang, N. Mrachacz-Kersting, et al., “Reducing the calibration time in somatosensory BCI by using tactile ERD,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 30, pp. 1870–1876, 2022. DOI: 10.1109/TNSRE.2022.3184402

|

| [28] |

L. Yao, M. L. Chen, X. J. Sheng, et al., “A multi-class tactile brain–computer interface based on stimulus-induced oscillatory dynamics,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 26, no. 1, pp. 3–10, 2018. DOI: 10.1109/TNSRE.2017.2731261

|

| [29] |

L. Yao, X. J. Sheng, N. Mrachacz-Kersting, et al., “Performance of brain–computer interfacing based on tactile selective sensation and motor imagery,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 26, no. 1, pp. 60–68, 2018. DOI: 10.1109/TNSRE.2017.2769686

|

| [30] |

W. B. Yi, S. Qiu, K. Wang, et al., “Enhancing performance of a motor imagery based brain–computer interface by incorporating electrical stimulation-induced SSSEP,” Journal of Neural Engineering, vol. 14, no. 2, article no. 026002, 2017. DOI: 10.1088/1741-2552/aa5559

|

| [31] |

H. Serby, E. Yom-Tov, and G. F. Inbar, “An improved P300-based brain-computer interface,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 13, no. 1, pp. 89–98, 2005. DOI: 10.1109/TNSRE.2004.841878

|

| [32] |

C. Guger, S. Daban, E. Sellers, et al., “How many people are able to control a P300-based brain-computer interface (BCI)?,” Neuroscience Letters, vol. 462, no. 1, pp. 94–98, 2009. DOI: 10.1016/j.neulet.2009.06.045

|

| [33] |

J. N. Mak, Y. Arbel, J. W. Minett, et al., “Optimizing the P300-based brain-computer interface: Current status, limitations and future directions,” Journal of Neural Engineering, vol. 8, no. 2, article no. 025003, 2011. DOI: 10.1088/1741-2560/8/2/025003

|

| [34] |

R. Fazel-Rezai, B. Z. Allison, C. Guger, et al., “P300 brain computer interface: Current challenges and emerging trends,” Frontiers in Neuroengineering, vol. 5, article no. 14, 2012. DOI: 10.3389/fneng.2012.00014

|

| [35] |

X. G. Chen, Z. K. Chen, S. Gao, et al., “A high-ITR SSVEP-based BCI speller,” Brain-Computer Interfaces, vol. 1, no. 3-4, pp. 181–191, 2014. DOI: 10.1080/2326263x.2014.944469

|

| [36] |

X. Chen, Y. J. Wang, M. Nakanishi, et al., “High-speed spelling with a noninvasive brain-computer interface,” Proceedings of the National Academy of Sciences of the United States of America, vol. 112, no. 44, pp. E6058–E6067, 2015. DOI: 10.1073/pnas.1508080112

|

| [37] |

M. Nakanishi, Y. J. Wang, X. G. Chen, et al., “Enhancing detection of SSVEPs for a high-speed brain speller using task-related component analysis,” IEEE Transactions on Biomedical Engineering, vol. 65, no. 1, pp. 104–112, 2018. DOI: 10.1109/TBME.2017.2694818

|

| [38] |

D. E. Broadbent and M. H. P. Broadbent, “From detection to identification: Response to multiple targets in rapid serial visual presentation,” Perception & Psychophysics, vol. 42, no. 2, pp. 105–113, 1987. DOI: 10.3758/bf03210498

|

| [39] |

L. Acqualagna and B. Blankertz, “Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP),” Clinical Neurophysiology, vol. 124, no. 5, pp. 901–908, 2013. DOI: 10.1016/j.clinph.2012.12.050

|

| [40] |

S. E. Zhang, X. G. Chen, Y. J. Wang, et al., “Visual field inhomogeneous in brain–computer interfaces based on rapid serial visual presentation,” Journal of Neural Engineering, vol. 19, no. 1, article no. 016015, 2022. DOI: 10.1088/1741-2552/ac4a3e

|

| [41] |

H. Riechmann, A. Finke, and H. Ritter, “Using a cVEP-based brain-computer interface to control a virtual agent,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 24, no. 6, pp. 692–699, 2016. DOI: 10.1109/TNSRE.2015.2490621

|

| [42] |

N. Kaongoen and S. Jo, “The effect of selective attention on multiple ASSRs for future BCI application,” in 2017 5th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea (South), pp. 9–12, 2017.

|

| [43] |

H. Higashi, T. M. Rutkowski, Y. Washizawa, et al., “EEG auditory steady state responses classification for the novel BCI,” in 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, pp. 4576–4579, 2011.

|

| [44] |

G. Z. Cao, J. Xie, G. H. Xu, et al., “Two frequencies sequential coding for the ASSR-based brain-computer interface application,” in 2019 IEEE International Conference on Real-time Computing and Robotics (RCAR), Irkutsk, Russia, pp. 170–174, 2019.

|

| [45] |

K. Liu, Z. L. Yao, L. Zheng, et al., “A high-frequency SSVEP-BCI system based on a 360 Hz refresh rate,” Journal of Neural Engineering, vol. 20, no. 4, article no. 046042, 2023. DOI: 10.1088/1741-2552/acf242

|

| [46] |

W. Q. Yan, G. H. Xu, M. Li, et al., “Steady-state motion visual evoked potential (SSMVEP) based on equal luminance colored enhancement,” PLoS One, vol. 12, no. 1, article no. e0169642, 2017. DOI: 10.1371/journal.pone.0169642

|

| [47] |

Y. K. Sun, L. Y. Liang, J. N. Sun, et al., “A binocular vision SSVEP brain-computer interface paradigm for dual-frequency modulation,” IEEE Transactions on Biomedical Engineering, vol. 70, pp. 1172–1181, 2023. DOI: 10.1109/TBME.2022.3212192

|

| [48] |

L. Y. Liang, J. J. Lin, C. Yang, et al., “Optimizing a dual-frequency and phase modulation method for SSVEP-based BCIs,” Journal of Neural Engineering, vol. 17, no. 4, article no. 046026, 2020. DOI: 10.1088/1741-2552/abaa9b

|

| [49] |

Y. K. Sun, Y. H. Li, Y. Z. Chen, et al., “Efficient dual-frequency SSVEP brain-computer interface system exploiting interocular visual resource disparities,” Expert Systems with Applications, vol. 252, article no. 124144, 2024. DOI: 10.1016/j.eswa.2024.124144

|

| [50] |

Y. K. Sun, L. Y. Liang, Y. H. Li, et al., “Dual-Alpha: A large EEG study for dual-frequency SSVEP brain–computer interface,” GigaScience, vol. 13, article no. giae041, 2024. DOI: 10.1093/gigascience/giae041

|

| [51] |

W. L. Zheng and B. L. Lu, “Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks,” IEEE Transactions on Autonomous Mental Development, vol. 7, no. 3, pp. 162–175, 2015. DOI: 10.1109/TAMD.2015.2431497

|

| [52] |

E. P. Torres, E. A. Torres, M. Hernández-Álvarez, et al., “EEG-based BCI emotion recognition: A survey,” Sensors, vol. 20, no. 18, article no. 5083, 2020. DOI: 10.3390/s20185083

|

| [53] |

X. W. Wang, D. Nie, and B. L. Lu, “Emotional state classification from EEG data using machine learning approach,” Neurocomputing, vol. 129, pp. 94–106, 2014. DOI: 10.1016/j.neucom.2013.06.046

|

| [54] |

A. Kreilinger, C. Neuper, and G. R. Müller-Putz, “Error potential detection during continuous movement of an artificial arm controlled by brain–computer interface,” Medical & Biological Engineering & Computing, vol. 50, no. 3, pp. 223–230, 2012. DOI: 10.1007/s11517-011-0858-4

|

| [55] |

A. Buttfield, P. W. Ferrez, and J. R. Millan, “Towards a robust BCI: Error potentials and online learning,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 14, no. 2, pp. 164–168, 2006. DOI: 10.1109/TNSRE.2006.875555

|

| [56] |

Y. K. Sun, X. G. Chen, B. C. Liu, et al., “Signal acquisition of brain–computer interfaces: A medical-engineering crossover perspective review,” Fundamental Research, vol. 5, no. 1, pp. 3–16, 2025. DOI: 10.1016/j.fmre.2024.04.011

|

| [57] |

S. Vaid, P. Singh, and C. Kaur, “EEG signal analysis for BCI interface: A review,” in 2015 Fifth International Conference on Advanced Computing & Communication Technologies, Haryana, India, pp. 143–147, 2015.

|

| [58] |

P. Hansen, M. Kringelbach, and R. Salmelin, MEG: An Introduction to Methods. Oxford University Press, Oxford, UK, 2010.

|

| [59] |

P. T. Lin, K. Sharma, T. Holroyd, et al., “A high performance MEG based BCI using single trial detection of human movement intention,” in Functional Brain Mapping and the Endeavor to Understand the Working Brain, F. Signorelli and D. Chirchiglia, Eds. InTech, Rijeka, Croatia, pp. 17–36, 2013.

|

| [60] |

S. T. Foldes, D. J. Weber, and J. L. Collinger, “MEG-based neurofeedback for hand rehabilitation,” Journal of NeuroEngineering and Rehabilitation, vol. 12, no. 1, article no. 85, 2015. DOI: 10.1186/s12984-015-0076-7

|

| [61] |

D. Dash, A. Wisler, P. Ferrari, et al., “MEG sensor selection for neural speech decoding,” IEEE Access, vol. 8, pp. 182320–182337, 2020. DOI: 10.1109/access.2020.3028831

|

| [62] |

N. Naseer and K. S. Hong, “fNIRS-based brain-computer interfaces: A review,” Frontiers in Human Neuroscience, vol. 9, article no. 3, 2015. DOI: 10.3389/fnhum.2015.00003

|

| [63] |

J. Zhang, X. H. Lin, G. Y. Fu, et al., “Mapping the small-world properties of brain networks in deception with functional near-infrared spectroscopy,” Scientific Reports, vol. 6, article no. 25297, 2016. DOI: 10.1038/srep25297

|

| [64] |

S. Coyle, T. Ward, C. Markham, et al., “On the suitability of near-infrared (NIR) systems for next-generation brain-computer interfaces,” Physiological Measurement, vol. 25, no. 4, pp. 815–822, 2004. DOI: 10.1088/0967-3334/25/4/003

|

| [65] |

A. Abdalmalak, D. Milej, L. C. M. Yip, et al., “Assessing time-resolved fNIRS for brain-computer interface applications of mental communication,” Frontiers in Neuroscience, vol. 14, article no. 105, 2020. DOI: 10.3389/fnins.2020.00105

|

| [66] |

E. M. Maynard, C. T. Nordhausen, and R. A. Normann, “The Utah intracortical electrode array: A recording structure for potential brain-computer interfaces,” Electroencephalography and Clinical Neurophysiology, vol. 102, no. 3, pp. 228–239, 1997. DOI: 10.1016/s0013-4694(96)95176-0

|

| [67] |

R. J. Vetter, J. C. Williams, J. F. Hetke, et al., “Chronic neural recording using silicon-substrate microelectrode arrays implanted in cerebral cortex,” IEEE Transactions on Biomedical Engineering, vol. 51, no. 6, pp. 896–904, 2004. DOI: 10.1109/TBME.2004.826680

|

| [68] |

J. Parvizi and S. Kastner, “Promises and limitations of human intracranial electroencephalography,” Nature Neuroscience, vol. 21, no. 4, pp. 474–483, 2018. DOI: 10.1038/s41593-018-0108-2

|

| [69] |

L. Koessler, T. Cecchin, S. Colnat-Coulbois, et al., “Catching the invisible: Mesial temporal source contribution to simultaneous EEG and SEEG recordings,” Brain Topography, vol. 28, no. 1, pp. 5–20, 2015. DOI: 10.1007/s10548-014-0417-z

|

| [70] |

T. Ball, M. Kern, I. Mutschler, et al., “Signal quality of simultaneously recorded invasive and non-invasive EEG,” NeuroImage, vol. 46, no. 3, pp. 708–716, 2009. DOI: 10.1016/j.neuroimage.2009.02.028

|

| [71] |

B. Dutta, A. Andrei, T. D. Harris, et al., “The neuropixels probe: A CMOS based integrated microsystems platform for neuroscience and brain-computer interfaces,” in 2019 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, pp. 10.1.1–10.1.4, 2019.

|

| [72] |

S. Guan, J. Wang, X. Gu, et al., “Elastocapillary self-assembled neurotassels for stable neural activity recordings,” Science Advances, vol. 5, no. 3, article no. eaav2842, 2019. DOI: 10.1126/sciadv.aav2842

|

| [73] |

Z. T. Zhao, X. Li, F. He, et al., “Parallel, minimally-invasive implantation of ultra-flexible neural electrode arrays,” Journal of Neural Engineering, vol. 16, no. 3, article no. 035001, 2019. DOI: 10.1088/1741-2552/ab05b6

|

| [74] |

S. Y. Zhao, G. Li, C. J. Tong, et al., “Full activation pattern mapping by simultaneous deep brain stimulation and fMRI with graphene fiber electrodes,” Nature Communications, vol. 11, no. 1, article no. 1788, 2020. DOI: 10.1038/s41467-020-15570-9

|

| [75] |

Y. Zhou, C. Gu, J. Z. Liang, et al., “A silk-based self-adaptive flexible opto-electro neural probe,” Microsystems & Nanoengineering, vol. 8, no. 1, article no. 118, 2022. DOI: 10.1038/s41378-022-00461-4

|

| [76] |

T. J. Oxley, N. L. Opie, S. E. John, et al., “Minimally invasive endovascular stent-electrode array for high-fidelity, chronic recordings of cortical neural activity,” Nature Biotechnology, vol. 34, no. 3, pp. 320–327, 2016. DOI: 10.1038/nbt.3428

|

| [77] |

N. Opie, “The Stentrode™ neural interface system,” in Brain-Computer Interface Research, C. Guger, B. Z. Allison, and M. Tangermann, Eds. Springer, Cham, Switzerland, pp. 127–132, 2021.

|

| [78] |

D. H. Jeong and J. Jeong, “In-ear EEG based attention state classification using echo state network,” Brain Sciences, vol. 10, no. 6, article no. 321, 2020. DOI: 10.3390/brainsci10060321

|

| [79] |

Z. H. Wang, N. L. Shi, Y. C. Zhang, et al., “Conformal in-ear bioelectronics for visual and auditory brain-computer interfaces,” Nature Communications, vol. 14, no. 1, article no. 4213, 2023. DOI: 10.1038/s41467-023-39814-6

|

| [80] |

Y. K. Sun, A. R. Shen, C. L. Du, et al., “A real-time non-implantation bi-directional brain-computer interface solution without stimulation artifacts,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 31, pp. 3566–3575, 2023. DOI: 10.1109/TNSRE.2023.3311750

|

| [81] |

Y. K. Sun, A. R. Shen, J. N. Sun, et al., “Minimally invasive local-skull electrophysiological modification with piezoelectric drill,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 30, pp. 2042–2051, 2022. DOI: 10.1109/TNSRE.2022.3192543

|

| [82] |

Y. K. Sun, Y. X. Gao, A. R. Shen, et al., “Creating ionic current pathways: A non-implantation approach to achieving cortical electrical signals for brain-computer interface,” Biosensors and Bioelectronics, vol. 268, article no. 116882, 2025. DOI: 10.1016/j.bios.2024.116882

|

| [83] |

S. Makeig, A. J. Bell, T. P. Jung, et al., “Independent component analysis of electroencephalographic data,” in Proceedings of the 9th International Conference on Neural Information Processing Systems, Denver, CO, USA, pp. 145–151, 1995.

|

| [84] |

H. Adeli, Z. Q. Zhou, and N. Dadmehr, “Analysis of EEG records in an epileptic patient using wavelet transform,” Journal of Neuroscience Methods, vol. 123, no. 1, pp. 69–87, 2003. DOI: 10.1016/S0165-0270(02)00340-0

|

| [85] |

A. Rakotomamonjy and V. Guigue, “BCI competition III: Dataset II-ensemble of SVMs for BCI P300 speller,” IEEE Transactions on Biomedical Engineering, vol. 55, no. 3, pp. 1147–1154, 2008. DOI: 10.1109/TBME.2008.915728

|

| [86] |

J. Jin, B. Z. Allison, C. Brunner, et al., “P300 Chinese input system based on Bayesian LDA,” Biomedizinische Technik/Biomedical Engineering, vol. 55, no. 1, pp. 5–18, 2010. DOI: 10.1515/bmt.2010.003

|

| [87] |

Z. L. Lin, C. S. Zhang, W. Wu, et al., “Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs,” IEEE Transactions on Biomedical Engineering, vol. 53, no. 12, pp. 2610–2614, 2006. DOI: 10.1109/TBME.2006.886577

|

| [88] |

H. Ramoser, J. Muller-Gerking, and G. Pfurtscheller, “Optimal spatial filtering of single trial EEG during imagined hand movement,” IEEE Transactions on Rehabilitation Engineering, vol. 8, no. 4, pp. 441–446, 2000. DOI: 10.1109/86.895946

|

| [89] |

K. K. Ang, Z. Y. Chin, H. H. Zhang, et al., “Filter bank common spatial pattern (FBCSP) in brain-computer interface,” in 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, pp. 2390–2397, 2008.

|

| [90] |

J. Jin, R. C. Xiao, I. Daly, et al., “Internal feature selection method of CSP based on L1-norm and Dempster–Shafer theory,” IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 11, pp. 4814–4825, 2021. DOI: 10.1109/TNNLS.2020.3015505

|

| [91] |

V. J. Lawhern, A. J. Solon, N. R. Waytowich, et al., “EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces,” Journal of Neural Engineering, vol. 15, no. 5, article no. 056013, 2018. DOI: 10.1088/1741-2552/aace8c

|

| [92] |

S. M. Abdelfattah, G. M. Abdelrahman, and M. Wang, “Augmenting the size of EEG datasets using generative adversarial networks,” in 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, pp. 1–6, 2018.

|

| [93] |

M. M. Moore, “Real-world applications for brain-computer interface technology,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 11, no. 2, pp. 162–165, 2003. DOI: 10.1109/TNSRE.2003.814433

|

| [94] |

M. De Vos, M. Kroesen, R. Emkes, et al., “P300 speller BCI with a mobile EEG system: Comparison to a traditional amplifier,” Journal of Neural Engineering, vol. 11, no. 3, article no. 036008, 2014. DOI: 10.1088/1741-2560/11/3/036008

|

| [95] |

S. H. Lee, M. Lee, and S. W. Lee, “Neural decoding of imagined speech and visual imagery as intuitive paradigms for BCI communication,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 28, no. 12, pp. 2647–2659, 2020. DOI: 10.1109/TNSRE.2020.3040289

|

| [96] |

F. R. Willett, E. M. Kunz, C. F. Fan, et al., “A high-performance speech neuroprosthesis,” Nature, vol. 620, no. 7976, pp. 1031–1036, 2023. DOI: 10.1038/s41586-023-06377-x

|

| [97] |

D. A. Moses, M. K. Leonard, J. G. Makin, et al., “Real-time decoding of question-and-answer speech dialogue using human cortical activity,” Nature Communications, vol. 10, no. 1, article no. 3096, 2019. DOI: 10.1038/s41467-019-10994-4

|

| [98] |

S. L. Metzger, K. T. Littlejohn, A. B. Silva, et al., “A high-performance neuroprosthesis for speech decoding and avatar control,” Nature, vol. 620, no. 7976, pp. 1037–1046, 2023. DOI: 10.1038/s41586-023-06443-4

|

| [99] |

B. J. Edelman, J. Meng, D. Suma, et al., “Noninvasive neuroimaging enhances continuous neural tracking for robotic device control,” Science Robotics, vol. 4, no. 31, article no. eaaw6844, 2019. DOI: 10.1126/scirobotics.aaw6844

|

| [100] |

H. T. Wang, T. Li, and Z. F. Huang, “Remote control of an electrical car with SSVEP-based BCI,” in 2010 IEEE International Conference on Information Theory and Information Security, Beijing, China, pp. 837–840, 2010.

|

| [101] |

A. L. Benabid, T. Costecalde, A. Eliseyev, et al., “An exoskeleton controlled by an epidural wireless brain-machine interface in a tetraplegic patient: A proof-of-concept demonstration,” The Lancet Neurology, vol. 18, no. 12, pp. 1112–1122, 2019. DOI: 10.1016/S1474-4422(19)30321-7

|

| [102] |

J. Y. Long, Y. Q. Li, T. Y. Yu, et al., “Target selection with hybrid feature for BCI-based 2-D cursor control,” IEEE Transactions on Biomedical Engineering, vol. 59, no. 1, pp. 132–140, 2012. DOI: 10.1109/TBME.2011.2167718

|

| [103] |

Y. Q. Li, J. Y. Long, T. Y. Yu, et al., “An EEG-based BCI system for 2-D cursor control by combining mu/beta rhythm and P300 potential,” IEEE Transactions on Biomedical Engineering, vol. 57, no. 10, pp. 2495–2505, 2010. DOI: 10.1109/TBME.2010.2055564

|

| [104] |

Y. Yu, Y. D. Liu, J. Jiang, et al., “An asynchronous control paradigm based on sequential motor imagery and its application in wheelchair navigation,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 26, no. 12, pp. 2367–2375, 2018. DOI: 10.1109/TNSRE.2018.2881215

|

| [105] |

Y. Yu, Z. T. Zhou, Y. D. Liu, et al., “Self-paced operation of a wheelchair based on a hybrid brain-computer interface combining motor imagery and P300 potential,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 25, no. 12, pp. 2516–2526, 2017. DOI: 10.1109/TNSRE.2017.2766365

|

| [106] |

R. Leeb, D. Friedman, G. R. Müller-Putz, et al., “Self-paced (asynchronous) BCI control of a wheelchair in virtual environments: A case study with a tetraplegic,” Computational Intelligence and Neuroscience, vol. 2007, article no. 079642, 2007. DOI: 10.1155/2007/79642

|

| [107] |

Q. B. Zhao, L. Q. Zhang, and A. Cichocki, “EEG-based asynchronous BCI control of a car in 3D virtual reality environments,” Chinese Science Bulletin, vol. 54, no. 1, pp. 78–87, 2009. DOI: 10.1007/s11434-008-0547-3

|

| [108] |

M. Li, F. Li, J. H. Pan, et al., “The MindGomoku: An online P300 BCI game based on Bayesian deep learning,” Sensors, vol. 21, no. 5, article no. 1613, 2021. DOI: 10.3390/s21051613

|

| [109] |

A. Lecuyer, F. Lotte, R. B. Reilly, et al., “Brain-computer interfaces, virtual reality, and videogames,” Computer, vol. 41, no. 10, pp. 66–72, 2008. DOI: 10.1109/mc.2008.410

|

| [110] |

H. Lorach, A. Galvez, V. Spagnolo, et al., “Walking naturally after spinal cord injury using a brain–spine interface,” Nature, vol. 618, no. 7963, pp. 126–133, 2023. DOI: 10.1038/s41586-023-06094-5

|

| [111] |

Y. Chen, G. K. Zhang, L. X. Guan, et al., “Progress in the development of a fully implantable brain-computer interface: The potential of sensing-enabled neurostimulators,” National Science Review, vol. 9, no. 10, article no. nwac099, 2022. DOI: 10.1093/nsr/nwac099

|

| [112] |

S. Little, A. Pogosyan, S. Neal, et al., “Adaptive deep brain stimulation in advanced Parkinson disease,” Annals of Neurology, vol. 74, no. 3, pp. 449–457, 2013. DOI: 10.1002/ana.23951

|

| [113] |

C. Neuper and G. Pfurtscheller, “Neurofeedback training for BCI control,” in Brain-Computer Interfaces: Revolutionizing Human-Computer Interaction, B. Graimann, G. Pfurtscheller, and B. Allison, Eds. Springer, Berlin Heidelberg, Germany, pp. 65–78, 2010.

|

| [114] |

J. N. Sun, J. He, and X. R. Gao, “Neurofeedback training of the control network in children improves brain computer interface performance,” Neuroscience, vol. 478, pp. 24–38, 2021. DOI: 10.1016/j.neuroscience.2021.08.010

|

| [115] |

T. Sollfrank, A. Ramsay, S. Perdikis, et al., “The effect of multimodal and enriched feedback on SMR-BCI performance,” Clinical Neurophysiology, vol. 127, no. 1, pp. 490–498, 2016. DOI: 10.1016/j.clinph.2015.06.004

|

| [116] |

R. Leeb, K. Gwak, D. S. Kim, et al., “Freeing the visual channel by exploiting vibrotactile BCI feedback,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, pp. 3093–3096, 2013.

|

| [117] |

K. K. Ang, C. T. Guan, K. S. Phua, et al., “Facilitating effects of transcranial direct current stimulation on motor imagery brain-computer interface with robotic feedback for stroke rehabilitation,” Archives of Physical Medicine and Rehabilitation, vol. 96, no. 3, pp. S79–S87, 2015. DOI: 10.1016/j.apmr.2014.08.008

|

| [118] |

J. A. Wilson, L. M. Walton, M. Tyler, et al., “Lingual electrotactile stimulation as an alternative sensory feedback pathway for brain–computer interface applications,” Journal of Neural Engineering, vol. 9, no. 4, article no. 045007, 2012. DOI: 10.1088/1741-2560/9/4/045007

|

| [119] |

J. D. Shi and Y. Fang, “Flexible and implantable microelectrodes for chronically stable neural interfaces,” Advanced Materials, vol. 31, no. 45, article no. 1804895, 2019. DOI: 10.1002/adma.201804895

|

| [120] |

X. Strakosas, H. Biesmans, T. Abrahamsson, et al., “Metabolite-induced in vivo fabrication of substrate-free organic bioelectronics,” Science, vol. 379, no. 6634, pp. 795–802, 2023. DOI: 10.1126/science.adc9998

|

| [121] |

J. A. Goding, A. D. Gilmour, U. A. Aregueta‐Robles, et al., “Living bioelectronics: Strategies for developing an effective long‐term implant with functional neural connections,” Advanced Functional Materials, vol. 28, no. 12, article no. 1702969, 2018. DOI: 10.1002/adfm.201702969

|

| [122] |

J. Prox, B. Seicol, H. Qi, et al., “Toward living neuroprosthetics: Developing a biological brain pacemaker as a living neuromodulatory implant for improving parkinsonian symptoms,” Journal of Neural Engineering, vol. 18, no. 4, article no. 046081, 2021. DOI: 10.1088/1741-2552/ac02dd

|

| [123] |

B. C. Liu, X. G. Chen, Y. J. Wang, et al., “Promoting brain–computer interface in China by BCI controlled robot contest in world robot contest,” Brain Science Advances, vol. 8, no. 2, pp. 79–81, 2022. DOI: 10.26599/BSA.2022.9050015

|